Guillermo Rauch

About

@vercel CEO

Content History

Some company news. We’re relocating our headquarters from San Francisco to… San Francisco. The best place to build. We’ll be shipping from our new, much bigger, office on Monday 😁

RT shadcn ✅@X is now using shadcn/ui. Joining xAI, Grok and Grokipedia.

Vercel’s automatic anomaly detection on point. This is a glimpse of the future of the cloud. Anomaly caught → AI investigation → pull request / action / analysis.
GitHub Status: Git Operations is experiencing degraded performance. We are continuing to investigate. https://www.githubstatus.com/incidents/1jw8ltnr1qrj
Link: https://x.com/githubstatus/status/1988986011171307808

AI products are constantly attacked and abused. @vercel BotID helps you fight abuse without degrading the UX or hurting your top-of-funnel. 3-point plan:
① 𝚗𝚙𝚖 𝚒 𝚋𝚘𝚝𝚒𝚍
② Run 𝚒𝚗𝚒𝚝𝙱𝚘𝚝𝙸𝚍() client-side
③ Use 𝚌𝚑𝚎𝚌𝚔𝙱𝚘𝚝𝙸𝚍().𝚒𝚜𝙱𝚘𝚝 server-side
Vercel: Open-source AI, protected. @NousResearch used BotID to stop inference abuse and reduce costs on their AI workloads. https://vercel.com/blog/how-nous-research-used-botid-to-block-automated-abuse-at-scale
Link: https://x.com/vercel/status/1988737312575127676

Browser makers: this is what peak new tab experience looks like. I love AI and imma let you finish, but I don't want to see the weather, the news, AOL slop. I press ⌘+T because I already know what I'm gonna do next, so your suggestions are actually noise. ps: believe it or not, to get this in Chrome you have to install an extension 🤨 I believe only Safari makes this extremely easy to set up (front and center of "General" settings)

I’m at the ER in San Francisco due to my wife’s sudden, acute appendicitis. Throughout the night and day you can hear the screams, dry-heaving, tussling, and cussing of drug-addicts in withdrawal. On the other hand, she also just received world-class, expedient medical care by lovely, dedicated medical professionals. What a roller coaster. We, as one American team, need to figure out how to end this opiate crisis. We're better than this. We can't have our neighbors and friends suffering on the streets, with drug dealers roaming around undeterred, and endless misery perpetuated under the veil of empathy.

This is so good: @stripe 🤝 @v0. You can just monetize things.
v0: It's now even easier to accept payments with @stripe in v0.
Link: https://x.com/v0/status/1988046297933508938

When Google App Engine came out (2008), its runtime was a strange "subset" of Python. You couldn't bind to C libraries, open sockets, run background threads… To fetch data you had to:
𝚏𝚛𝚘𝚖 𝚐𝚘𝚘𝚐𝚕𝚎.𝚊𝚙𝚙𝚎𝚗𝚐𝚒𝚗𝚎.𝚊𝚙𝚒 𝚒𝚖𝚙𝚘𝚛𝚝 𝚞𝚛𝚕𝚏𝚎𝚝𝚌𝚑
𝚛𝚎𝚜𝚞𝚕𝚝 = 𝚞𝚛𝚕𝚏𝚎𝚝𝚌𝚑.𝚏𝚎𝚝𝚌𝚑("𝚑𝚝𝚝𝚙://…")
When AWS EC2 came out (2006), you could do ✨ anything ✨.
Over time GCP realized that the restrictions did not make sense, and that people wanted to have full control and versioning over their runtimes. They didn't want "Google's Python", they wanted Python. AWS in the meantime accrued an insurmountable lead.
We're now, strangely, seeing the same play out with JavaScript runtimes today. I fully expect the "VendorJS" days to be numbered. Save this tweet.

In 2025, you no longer have to write your application for a specific cloud vendor or runtime. You should be able to grab e.g. your @nodejs/@bunjavascript codebase, Postgres db, and run it anywhere.
Your “lock-in” can actually be easily quantified: 𝚐𝚛𝚎𝚙 for the vendor name, deps, and specific runtime APIs in your codebase. Look at the length of your 𝚝𝚘𝚖𝚕 config. Watch for 𝚟𝚒𝚝𝚎-𝚙𝚕𝚞𝚐𝚒𝚗-𝚟𝚎𝚗𝚍𝚘𝚛 to paper over incompatibilities with the standard runtime.
Our commitment is rooted both in portability and ease of use. We believe configuring your runtime or app *for* Vercel is not a good experience. And it’s altogether a net negative for the world.
Vercel: The anti-vendor-lock-in cloud. Clouds can lock you in by forcing you to entangle your app’s codebase with their APIs and primitives. Not Vercel. Our approach, framework-defined infrastructure, maximizes both ease of use and portability. Learn how ↓ https://vercel.com/blog/vercel-the-anti-vendor-lock-in-cloud
Link: https://x.com/vercel/status/1988012791752782244
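The grep check from the post above can be sketched in TypeScript. This is a minimal illustration, not a real audit tool: the function name findVendorReferences, the in-memory file map, and the "acme-cloud" marker are all hypothetical, and it only does a case-insensitive substring match per line.

```typescript
// Hypothetical sketch: an in-memory stand-in for grepping a codebase.
// File names, contents, and the "acme-cloud" marker are illustrative only.
type SourceFiles = Record<string, string>;

interface VendorHit {
  file: string;
  line: number; // 1-based line number
  text: string;
}

function findVendorReferences(files: SourceFiles, markers: string[]): VendorHit[] {
  const needles = markers.map((marker) => marker.toLowerCase());
  const hits: VendorHit[] = [];
  for (const file of Object.keys(files)) {
    const contents = files[file] ?? "";
    contents.split("\n").forEach((text, index) => {
      const haystack = text.toLowerCase();
      // A line counts once, no matter how many markers it mentions.
      if (needles.some((needle) => haystack.indexOf(needle) !== -1)) {
        hits.push({ file, line: index + 1, text: text.trim() });
      }
    });
  }
  return hits;
}

const example: SourceFiles = {
  "src/db.ts": "import { Pool } from 'pg';\nexport const pool = new Pool();",
  "src/cache.ts": "import { kv } from 'acme-cloud/kv';\nexport const cache = kv;",
};

const vendorHits = findVendorReferences(example, ["acme-cloud"]);
console.log(`${vendorHits.length} vendor-specific line(s) found`);
```

The fewer lines such a scan reports, the less code would need to change to move the app to another host. A real check would also cover dependency manifests and config files, as the post suggests.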

Nature is healing:
• Neon 𝚙𝚐 (TCP): 3.00𝚖𝚜 avg 🏆
• Neon HTTP: 4.50𝚖𝚜 avg
• Neon WebSocket: 3.90𝚖𝚜 avg
🌊 Fluid solves the connection pooling issues of serverless. And works *best* with battle-tested clients like 𝚙𝚐. It’s the ideal backend runtime. No lock-in, no special caching or HTTP drivers, excellent in-cloud latency and security.
Andre Landgraf: Fluid compute changes how serverless works on Vercel:
- Classic serverless: Connection to db must be established on every run, so http/websocket were faster
- Fluid: Can establish a db connection once and keep it open across many function calls, so pg tcp is now fastest
Link: https://x.com/andrelandgraf/status/1987974748597555417
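The difference between connecting on every run and keeping one connection open across calls can be sketched as follows. This is a toy model under stated assumptions: FakeConnection is a hypothetical stand-in that only counts how many connections get opened, and the two handler functions are illustrative names, not Vercel or pg APIs.

```typescript
// Hypothetical stand-in for a database client; it only counts how many
// connections get opened. A real app would use a client such as pg.
class FakeConnection {
  static opened = 0;

  constructor() {
    FakeConnection.opened += 1;
  }

  query(sql: string): string {
    return `rows for: ${sql}`;
  }
}

// Connect-per-invocation: every call pays the connection setup cost.
function handlePerInvocation(sql: string): string {
  const connection = new FakeConnection();
  return connection.query(sql);
}

// Reuse: the connection lives outside the handler, is opened lazily once,
// and is shared by every later call served by the same instance.
let sharedConnection: FakeConnection | undefined;

function handleWithReuse(sql: string): string {
  if (sharedConnection === undefined) {
    sharedConnection = new FakeConnection();
  }
  return sharedConnection.query(sql);
}

for (let i = 0; i < 3; i++) handlePerInvocation("SELECT 1");
const openedPerInvocation = FakeConnection.opened;

FakeConnection.opened = 0;
for (let i = 0; i < 3; i++) handleWithReuse("SELECT 1");
const openedWithReuse = FakeConnection.opened;

console.log({ openedPerInvocation, openedWithReuse });
```

In this model three calls open three connections in the first style and one in the second, which is the effect the quoted post credits for TCP clients becoming the fastest option.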

Vercel loves TanStack. We provided initial funding for its development. Huge respect for @tannerlinsley’s work, who is behind so many great React projects. Excited to support TanStack on Vercel, via Nitro. Beautiful example of the open web ecosystem coming together.
Vercel Developers: Vercel supports TanStack Start through Nitro. Add 𝚗𝚒𝚝𝚛𝚘() to 𝚟𝚒𝚝𝚎.𝚌𝚘𝚗𝚏𝚒𝚐.𝚝𝚜 to easily deploy your projects: https://vercel.com/changelog/support-for-tanstack-start
Link: https://x.com/vercel_dev/status/1987931072655773736

gm 🌊

RT Aravind Srinivas Early Comet Android users are completing their coding projects on Vercel from their phones now! A general agent on your phone is going to give you a ton of agency on the go. That's the goal. https://www.reddit.com/r/perplexity_ai/s/NlNeyCy35Y

English is the insurmountable advantage of the American empire. It’s the most beautiful, sophisticated, flexible language in the world.
Like a good software system, it features progressive disclosure of complexity. It’s extremely easy to get started with, yet it takes decades to master. There’s a word, “phrasal verb”, or idiom for anything you want to express.
It’s extensible and fluid: new words are invented all the time and easily assimilated. New science, new products, new movies, new memes… odds are they first arrive in English.
Countries and individuals can simply get ahead with the one weird trick of pervasive English fluency. I love visiting places like 🇸🇪 or 🇳🇱 where everyone speaks English or makes the effort to.
spaceman 🦕☄️🔥: I am a strong believer in the theory that China's massively delayed development is a direct result of their written language offering nearly zero clues as to how characters are pronounced, or even what they mean. This is impacted further by the thousands necessary for literacy /1
Link: https://x.com/meteor_cultist/status/1987635110649217516

There’s a contagion effect to good habits. When you watch your friends or relatives exercise, you want to exercise. Same is true for shipping, giving back, eating well… Surround yourself with people who raise your bar.

RT Sébastien Chopin You can now "use workflow" with @nuxt_js https://useworkflow.dev/docs/getting-started/nuxt

Vercel Developers: Connections to all Vercel deployments are now secured with post-quantum encryption to protect against future quantum computing attacks. All modern browsers will use these new algorithms automatically during TLS handshakes. https://vercel.com/changelog/post-quantum-crypto Link: https://x.com/vercel_dev/status/1986898795473555506

Press ⎵, type in a phrase, and a blazing fast AI model kicks in to help you search: http://vercel.domains. This is super helpful to discover TLDs relevant to your business (there are over 1,000 gTLDs).
Vercel Developers: Vercel Domains now supports AI search. Go beyond keywords, describe what you’re looking for. Try it now at http://vercel.com/domains
Link: https://x.com/vercel_dev/status/1986870975477235928

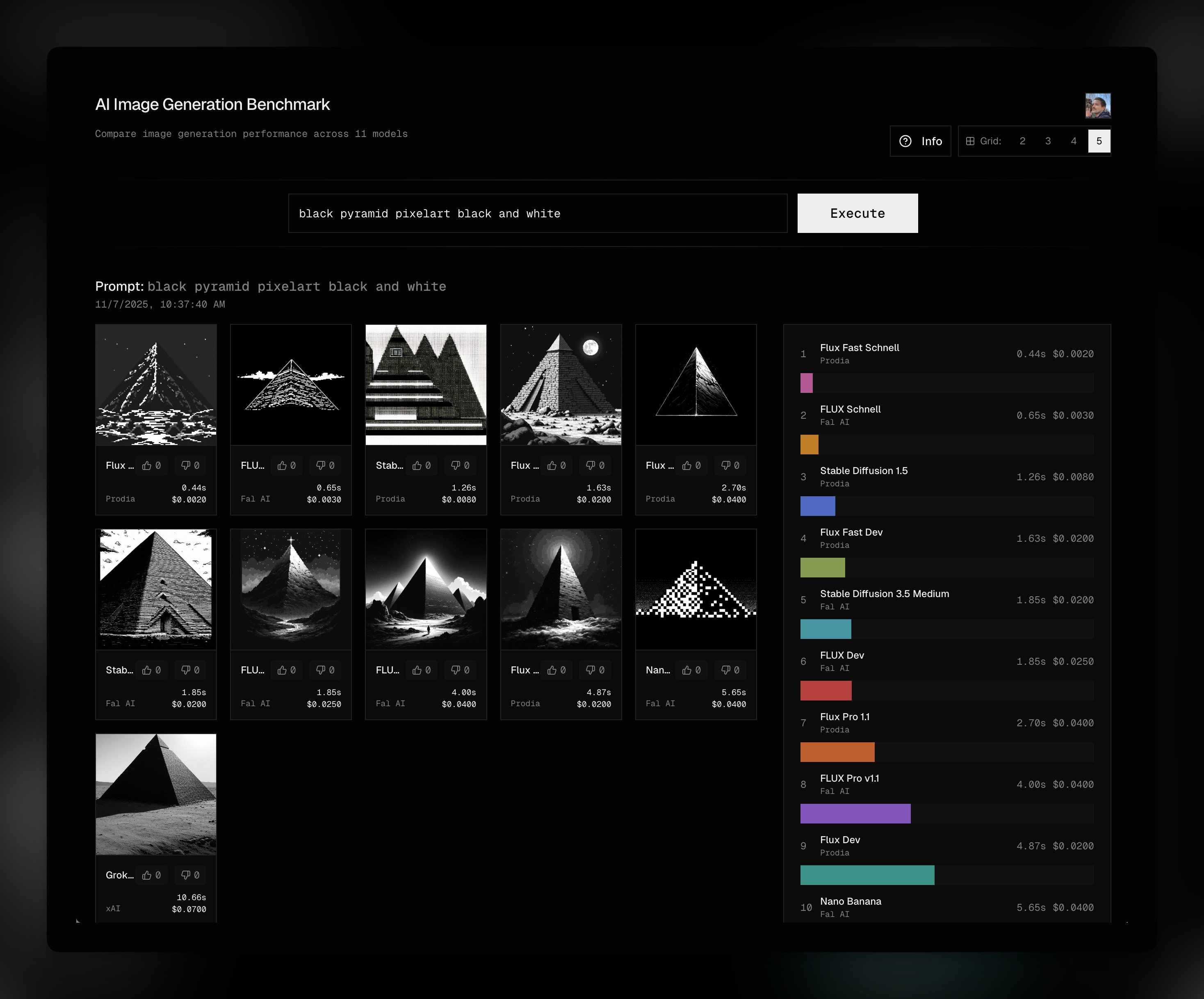

The price/performance range of image models is wild. This is a really cool @v0 to benchmark them. The fastest model, served by @prodialabs, takes ~400ms. The slowest, ~11s 😳 https://v0imgbench.vercel.app/
Esteban Suárez: I generated an image benchmarking playground on @v0 – featuring models from @bfl_ml, @grok, @GoogleAIStudio and @StabilityAI served by @prodialabs and @fal. Try it here: https://v0imgbench.vercel.app
Link: https://x.com/EstebanSuarez/status/1986865484315123874

𝚗𝚙𝚖 𝚒 𝚊𝚒
𝚗𝚙𝚖 𝚒 𝚗𝚎𝚡𝚝
𝚗𝚙𝚖 𝚒 𝚏𝚕𝚊𝚐𝚜
𝚗𝚙𝚖 𝚒 𝚠𝚘𝚛𝚔𝚏𝚕𝚘𝚠
𝚗𝚙𝚖 𝚒 -𝚐 𝚜𝚊𝚗𝚍𝚋𝚘𝚡 🆕

Get your own VPS (Virtual Private Sandbox) in one command: 𝚗𝚙𝚡 𝚜𝚊𝚗𝚍𝚋𝚘𝚡. The fastest way to spin up a sandbox in the cloud and test your agent’s environment.
Vercel Developers: The Vercel Sandbox CLI is now available. ▲ ~ / 𝚗𝚙𝚡 𝚜𝚊𝚗𝚍𝚋𝚘𝚡 𝚛𝚞𝚗 Create, run, and manage secure, ephemeral Sandbox environments right from your terminal.
Link: https://x.com/vercel_dev/status/1986810835738771574

Vercel’s AI products like @v0, Agent, and AI Gateway are built on Vercel.
🥵 Before: global accelerators (AGA), load balancers (NLB), TLS ingress (Nginx), Kubernetes (EKS / Karpenter), EC2, Terraform…
🌊 After: Fluid and 𝚐𝚒𝚝 𝚙𝚞𝚜𝚑
How to build your own Gateway, globally distributed, processing trillions of tokens:
Vercel Developers: We build Vercel on Vercel. Vercel AI Gateway runs on Fluid compute, on the same network as you:
• Concurrency keeps CPUs hot, costs low
• Billed for CPU only when active
• Anycast routing for low latency
• Redis + in-memory caching
• Built-in o11y
https://vercel.com/blog/how-ai-gateway-runs-on-fluid-compute
Link: https://x.com/vercel_dev/status/1986610302868447537

RT Vercel Introducing Snowflake on v0.
• Ask questions about your data
• Build data-driven Next.js apps
• Deploy securely: compute in Snowflake, auth with Vercel
Your trusted data, moving at the speed of AI.

RT Vercel Developers Free, invisible CAPTCHA in 3 steps:
① 𝚗𝚙𝚖 𝚒 𝚋𝚘𝚝𝚒𝚍
② Run 𝚒𝚗𝚒𝚝𝙱𝚘𝚝𝙸𝚍() client-side
③ Use 𝚌𝚑𝚎𝚌𝚔𝙱𝚘𝚝𝙸𝚍().𝚒𝚜𝙱𝚘𝚝 server-side
Vercel: Save your holiday traffic for customers, not bots. Enterprise and Pro teams can use Vercel BotID Deep Analysis free of charge through January 15. https://vercel.com/changelog/free-botid-deep-analysis
Link: https://x.com/vercel/status/1986204259776667668

Activity on rauchg/blog

rauchg contributed to rauchg/blog
