Emad Mostaque

Bio

Open Sovereign AI @ii_posts. Founder @StabilityAI.

Platform

Content history

Estimate: average ~1k tokens per post => 100b tokens per day. At @grok 4 Fast's $0.5/m tokens (vs $10 for GPT-5, $15 for Sonnet 4.5) => $50k a day to process, $18m a year. Value = huge. Shows how amazing the price/performance of Grok 4 Fast is.
Quoting Elon Musk: @Moimaere @levelsio @nikitabier By next month, Grok will literally look at and understand all ~100 million 𝕏 posts per day (including images and video), no matter how small the account, and recommend content to users based on the intrinsic quality of the content itself. This is only possible with advanced AI Link: https://x.com/elonmusk/status/1988662682241618367
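The cost estimate above is simple arithmetic, sketched below with the post's own inputs (~1k tokens per post, ~100 million posts a day, and the quoted per-million-token prices). The post does not say whether those prices are input or output rates, so treat the comparison as rough.

```python
# Back-of-envelope cost of running an LLM over every X post each day.
# All inputs are the post's estimates, not measured figures.
TOKENS_PER_POST = 1_000          # assumed average, per the post
POSTS_PER_DAY = 100_000_000      # "~100 million X posts per day"

# Per-million-token prices quoted in the post (USD); the pricing tier
# (input vs output) is not stated, so these are rough.
PRICE_PER_MILLION = {
    "Grok 4 Fast": 0.5,
    "GPT-5": 10.0,
    "Sonnet 4.5": 15.0,
}


def daily_cost_usd(price_per_million: float) -> float:
    """Cost to process one day of posts at a given $/1M-token price."""
    tokens_per_day = TOKENS_PER_POST * POSTS_PER_DAY  # 100 billion tokens
    return tokens_per_day / 1_000_000 * price_per_million


if __name__ == "__main__":
    for model, price in PRICE_PER_MILLION.items():
        daily = daily_cost_usd(price)
        print(f"{model}: ${daily:,.0f}/day, ${daily * 365:,.0f}/year")
```

Grok 4 Fast comes out at $50,000 a day and $18.25m a year, matching the post's rounded $18m; at the quoted prices GPT-5 and Sonnet 4.5 would cost 20x and 30x more for the same workload.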

AI multiple compression in action, now < 30x sales :o jk. Congrats to the Cursor team, many more tab keys to go.
Quoting Cursor: We've raised $2.3B in Series D funding from Accel, Andreessen Horowitz, Coatue, Thrive, Nvidia, and Google. We're also happy to share that Cursor has grown to over $1B in annualized revenue and now produces more code than any other agent in the world. This funding will allow Link: https://x.com/cursor_ai/status/1988971258449682608

RT Henri Liriani We're launching Lightfield today. It's a CRM designed for founders going zero to one—shaped in the past year by hundreds of founders who took a bet on us and now use it daily, and a waitlist of 20,000 more. When starting out, it takes countless hours of talking to customers to figure out what works. From day zero, Lightfield builds and updates itself from your unstructured conversations with customers. It becomes your customer memory: helping you execute deals, communicate without missing details, and understand patterns across your business. It's free to try. Would love to hear what you think: http://lightfield.app

Just call it the Gabecube. Hardware looks to be around AMD Ryzen AI Max+ 395 (Strix Halo) level. Would be cool if the RAM was upgradeable; the chip can run 128 GB for LLMs (see @FrameworkPuter Desktop).
Quoting The Game Awards: First footage of Valve's Steam Machine, shipping in early 2026. 6x more powerful than a Steam Deck. No pricing announced. Link: https://x.com/geoffkeighley/status/1988669659881820383
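To put "128 GB for LLMs" in perspective, here is a rough sketch of the largest model whose weights fit in that much memory. Assumptions (not from the post): decimal gigabytes, 20% of RAM held back for the OS, KV cache and activations, and weight storage at the listed precisions only.

```python
# Rough upper bound on LLM size that fits in a given amount of RAM.
# Assumptions: decimal GB, and a fixed fraction reserved for OS/KV cache/activations.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def max_params_billions(ram_gb: float, precision: str, reserved_fraction: float = 0.2) -> float:
    """Largest parameter count (in billions) whose weights fit in the usable RAM."""
    usable_bytes = ram_gb * 1e9 * (1 - reserved_fraction)
    return usable_bytes / BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        print(f"128 GB @ {precision}: ~{max_params_billions(128, precision):.0f}B params")
```

Under these assumptions 128 GB holds roughly a 51B model in BF16, ~102B in INT8 and ~205B in INT4; long contexts eat into the reserved share, so real limits are lower.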

RT Wes Roth Thank you so much to @DrKnowItAll16 for highlighting our talk with @EMostaque. It was a fascinating conversation. Emad is able to provide insight on a lot of subjects, but specifically we talked about the future of work and employment. I recommend watching this one asap.
Quoting DrKnowItAll: This is a great interview with @EMostaque. I highly recommend watching it. Thanks, @WesRothMoney for making it happen! https://youtu.be/07fuMWzFSUw?si=Xa_faxDSJyuRl1-n Link: https://x.com/DrKnowItAll16/status/1987502478640693446

RT Shengyuan Hi Dzmitry, our INT4 QAT is weight-only with fake-quantization: we keep the original BF16 weights in memory, and during the forward pass we quantize them on the fly to INT4 and immediately de-quantize back to BF16 for the actual computation. The original unquantized BF16 weight is retained because gradients have to be applied to it.
Quoting 🇺🇦 Dzmitry Bahdanau: Can someone explain to me int4 training by @Kimi_Moonshot? Does it mean weights are stored in int4 and dequantized on the fly for further fp8/bf16 computation? Or does this mean actual calculations are in int4, including accumulators? Link: https://x.com/DBahdanau/status/1986821392206114972
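The reply describes a standard fake-quantization loop. Below is a minimal pure-Python sketch of that idea, assuming a symmetric per-group INT4 scheme with group size 4 (both assumptions, not Moonshot's published recipe) and Python floats standing in for BF16. Gradients reach the master weights through a straight-through estimator, a common QAT choice; the thread does not say which estimator Kimi uses.

```python
# Weight-only INT4 fake quantization with full-precision master weights.
# Generic sketch: the symmetric per-group scheme and group size are assumptions;
# Python floats stand in for BF16.

def fake_quant_int4(weights: list[float], group_size: int = 4) -> list[float]:
    """Quantize each group to INT4, then immediately de-quantize back to float."""
    out = []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        max_abs = max(abs(w) for w in group)
        # Symmetric scale: the largest magnitude in the group maps to +/-7.
        scale = max_abs / 7 if max_abs > 0 else 1.0
        for w in group:
            q = max(-8, min(7, round(w / scale)))  # clamp to the signed INT4 range
            out.append(q * scale)  # de-quantize for the actual (float) computation
    return out


def train_step(master: list[float], x: list[float], target: float, lr: float = 0.1):
    """One SGD step for a linear model y = w . x under fake quantization.

    The forward pass only sees INT4-grid weights, but the gradient is applied
    to the full-precision master copy (straight-through estimator: dq(w)/dw := 1).
    """
    wq = fake_quant_int4(master)
    y = sum(w * xi for w, xi in zip(wq, x))
    err = y - target  # derivative of 0.5 * (y - target)**2 with respect to y
    grads = [err * xi for xi in x]  # dLoss/dw_q, passed straight through to w
    new_master = [w - lr * g for w, g in zip(master, grads)]
    return new_master, y
```

For example, `train_step([0.7, 0.1, 0.2, 0.3], [0.0, 1.0, 0.0, 0.0], 0.0, lr=0.01)` moves `master[1]` from 0.1 to about 0.099, yet its quantized value does not change. That is why the unquantized copy has to be kept: if only INT4 weights were stored, updates smaller than half a quantization step would be rounded away.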

Will continuous learning for AI models be solved within 2 years?

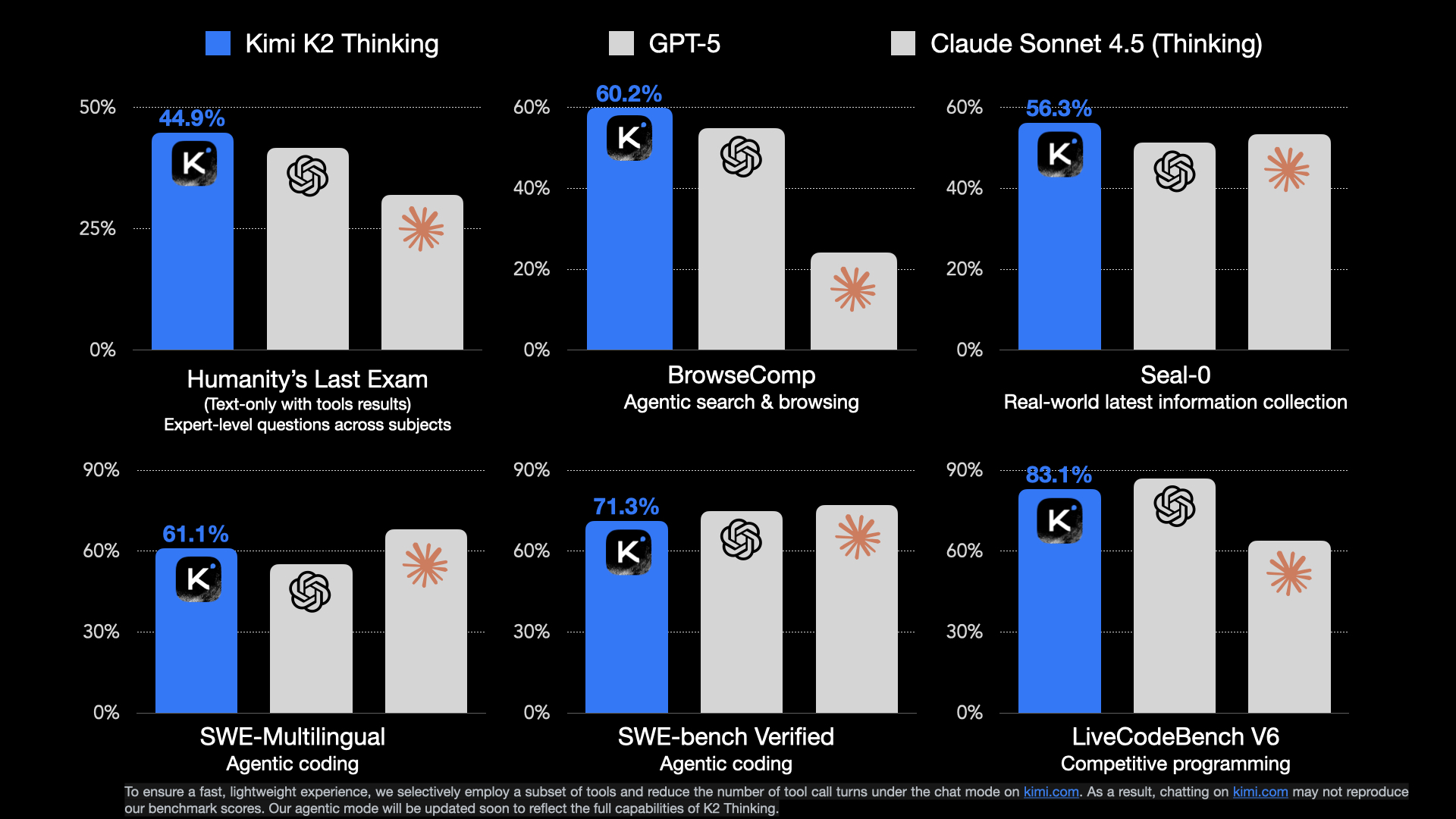

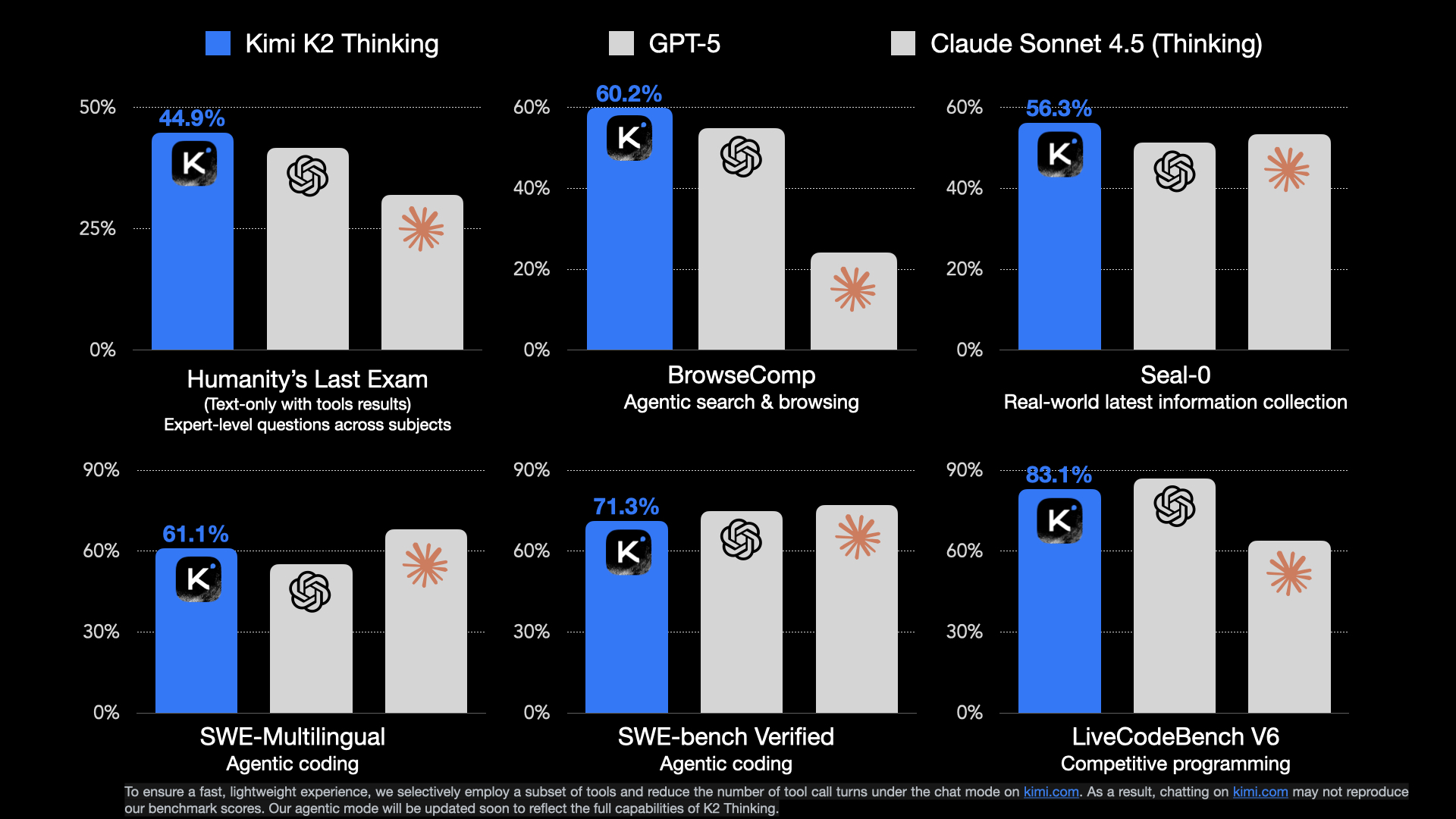

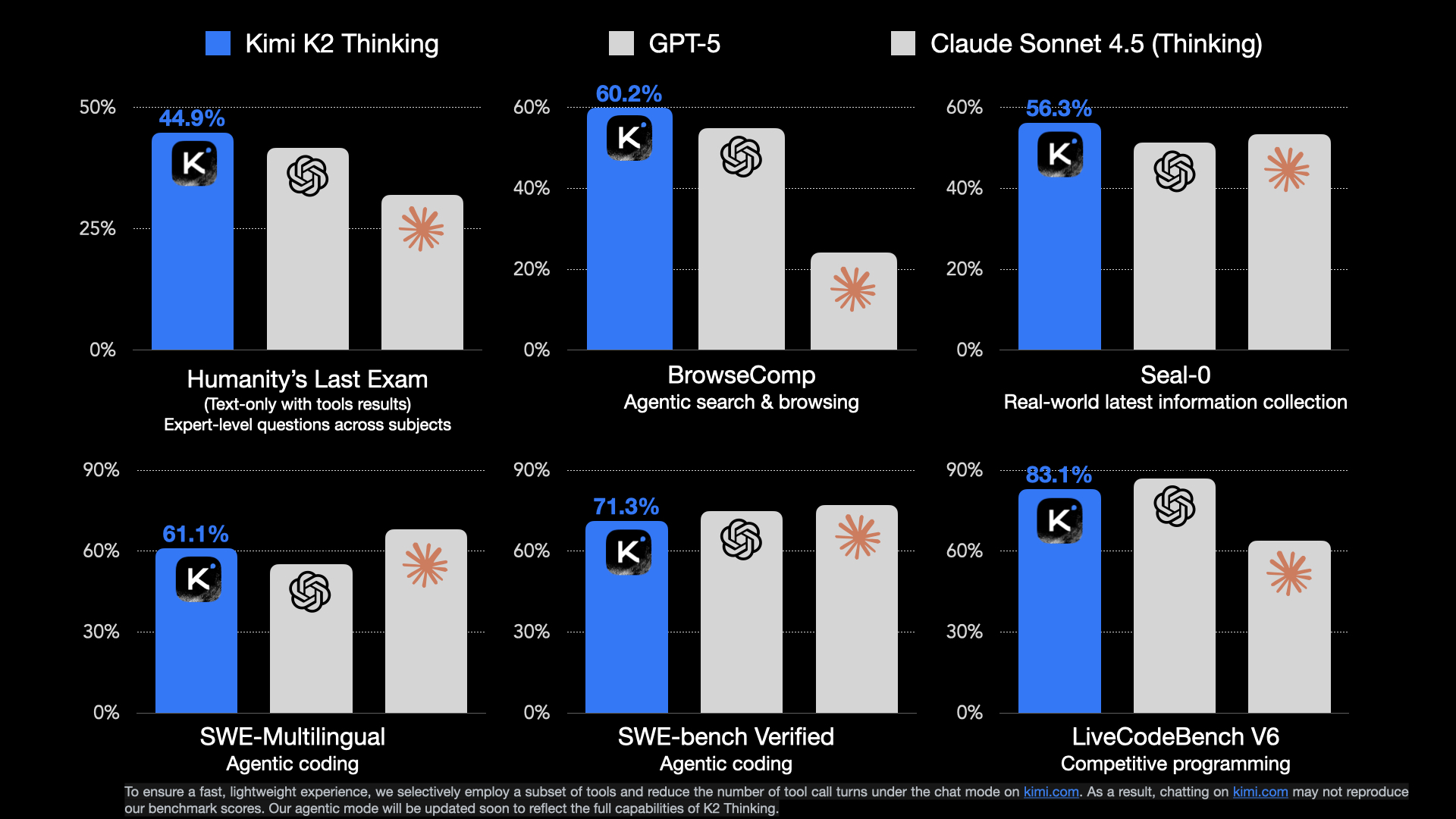

In a year or so this level of performance should be available on a 32B dense model (K2 is 32B active) at a cost of < $0.2/million tokens. I don't think folk have that in their estimates.
Quoting Kimi.ai: 🚀 Hello, Kimi K2 Thinking! The Open-Source Thinking Agent Model is here. 🔹 SOTA on HLE (44.9%) and BrowseComp (60.2%) 🔹 Executes up to 200 – 300 sequential tool calls without human interference 🔹 Excels in reasoning, agentic search, and coding 🔹 256K context window Built Link: https://x.com/Kimi_Moonshot/status/1986449512538513505
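The "(K2 is 32B active)" aside carries the argument: a 32B dense model does the same compute per token as K2 but needs far less memory for weights. A sketch under stated assumptions: about 2 FLOPs per active parameter per generated token (the usual forward-pass approximation), 4-bit weights for both models, and Kimi K2's published size of roughly 1T total / 32B active parameters. The dense model is hypothetical.

```python
# Compute vs memory for an MoE model and a dense model with the same active size.
# Assumptions: ~2 FLOPs per active parameter per token, INT4 (0.5-byte) weights,
# Kimi K2 at ~1T total / 32B active parameters; the 32B dense model is hypothetical.
BYTES_PER_PARAM_INT4 = 0.5


def per_token_flops(active_params: float) -> float:
    """Approximate forward-pass FLOPs to generate one token."""
    return 2 * active_params


def weight_memory_gb(total_params: float, bytes_per_param: float = BYTES_PER_PARAM_INT4) -> float:
    """Memory needed just to hold the weights, in decimal GB."""
    return total_params * bytes_per_param / 1e9


MODELS = {
    # name: (total params, active params per token)
    "Kimi K2 (MoE)": (1e12, 32e9),
    "hypothetical 32B dense": (32e9, 32e9),
}

if __name__ == "__main__":
    for name, (total, active) in MODELS.items():
        print(f"{name}: {per_token_flops(active) / 1e9:.0f} GFLOPs/token, "
              f"{weight_memory_gb(total):.0f} GB of INT4 weights")
```

Both come out at ~64 GFLOPs per token, but the dense model's weights are ~16 GB against ~500 GB, about 31x smaller, so it fits on far fewer accelerators. Real serving cost also depends on batch size, KV cache and memory bandwidth, which this ignores.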

No current AI systems have morals explicitly encoded into them at pretraining time. At the very least they should have Asimov's laws of robotics, eh.
Quoting Pope Leo XIV: Technological innovation can be a form of participation in the divine act of creation. It carries an ethical and spiritual weight, for every design choice expresses a vision of humanity. The Church therefore calls all builders of #AI to cultivate moral discernment as a Link: https://x.com/Pontifex/status/1986776900811837915

Can you imagine being a "frontier" lab that's raised like a billion dollars and now you can't release your latest model because it can't beat @Kimi_Moonshot? 🗻 SOTA can be a bitch if that's your target.
Quoting Emad: Can you imagine being a "frontier" lab that's raised like a billion dollars and now you can't release your latest model because it can't beat deepseek? 🐳 Sota can be a bitch if thats your target Link: https://x.com/EMostaque/status/1881380253630890471

RT Pope Leo XIV Technological innovation can be a form of participation in the divine act of creation. It carries an ethical and spiritual weight, for every design choice expresses a vision of humanity. The Church therefore calls all builders of #AI to cultivate moral discernment as a fundamental part of their work—to develop systems that reflect justice, solidarity, and a genuine reverence for life.

RT gabriel Every single person I know who made a cool video demo of a project that shows agency & great technical ability has been reached out to by top labs & top companies. You never need to compete with millions of other people for your top-pick role.

Necessity is the mother of invention. Also, training optimally on small amounts of chips with a focus on data means the Chinese models take 10-100x less compute to run as well, and have that cost advantage: $150/m for GPT-4.5 vs $0.5/m for DeepSeek V3, etc.
Quoting Yuchen Jin: If you ever wonder how Chinese frontier models like Kimi, DeepSeek, and Qwen are trained on far fewer (and nerfed) Nvidia GPUs than US models. In 1969, NASA’s Apollo mission landed people on the moon with a computer that had just 4KB of RAM. Creativity loves constraints. Link: https://x.com/Yuchenj_UW/status/1986474507771781419

I actually think all governments should offer infrastructure underwriting and guarantees for AI build-out, just as they do for other essential infrastructure. China does! The productive capacity of a country, its intelligent capital stock, is basically its GPUs & robots.
Quoting Sam Altman: I would like to clarify a few things. First, the obvious one: we do not have or want government guarantees for OpenAI datacenters. We believe that governments should not pick winners or losers, and that taxpayers should not bail out companies that make bad business decisions or Link: https://x.com/sama/status/1986514377470845007

A note on costs/compute: the base Kimi K2 model used 2.8m H800 hours with 14.8 trillion tokens, about $5.6m worth. Details of post-training for reasoning were not given, but it is likely at most 20% more (excluding data prep!). It would be < $3m for SOTA if they had Blackwell chip access.
Quoting Kimi.ai: 🚀 Hello, Kimi K2 Thinking! The Open-Source Thinking Agent Model is here. 🔹 SOTA on HLE (44.9%) and BrowseComp (60.2%) 🔹 Executes up to 200 – 300 sequential tool calls without human interference 🔹 Excels in reasoning, agentic search, and coding 🔹 256K context window Built Link: https://x.com/Kimi_Moonshot/status/1986449512538513505
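The cost note can be checked with the post's own numbers. In this sketch the $/GPU-hour is derived from the stated hours and cost, the 20% post-training overhead is the post's upper guess, and the Blackwell gain is back-solved from the "< $3m" figure rather than taken from any benchmark; the post does not state it.

```python
# Training-cost arithmetic using only the figures in the post.
PRETRAIN_GPU_HOURS = 2.8e6     # H800 hours, per the post
PRETRAIN_COST_USD = 5.6e6      # "about $5.6m worth"
POST_TRAIN_OVERHEAD = 0.20     # "likely max 20% more" (excludes data prep)
BLACKWELL_TARGET_USD = 3e6     # the post's "< $3m" claim


def implied_rate(cost_usd: float, gpu_hours: float) -> float:
    """$/GPU-hour implied by a total cost and a GPU-hour count."""
    return cost_usd / gpu_hours


def total_cost(pretrain_cost_usd: float, overhead: float) -> float:
    """Pre-training cost plus post-training expressed as a fraction of it."""
    return pretrain_cost_usd * (1 + overhead)


if __name__ == "__main__":
    rate = implied_rate(PRETRAIN_COST_USD, PRETRAIN_GPU_HOURS)
    total = total_cost(PRETRAIN_COST_USD, POST_TRAIN_OVERHEAD)
    # Cost-efficiency gain Blackwell would need for the "< $3m" claim to hold.
    needed_gain = total / BLACKWELL_TARGET_USD
    print(f"implied rate: ${rate:.2f}/GPU-hour")
    print(f"upper-bound total: ${total / 1e6:.2f}m")
    print(f"needed cost-efficiency gain: >{needed_gain:.2f}x")
```

This gives $2.00 per H800-hour and at most about $6.72m all-in, so the "< $3m" figure implicitly assumes Blackwell delivers the same training run roughly 2.24x more cheaply.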

Congratulations to @Kimi_Moonshot for achieving state of the art on many benchmarks & open-sourcing the model! The gap between closed & open continues to narrow even as the cost of increasingly economically valuable tokens collapses. K2 has its own unique vibe too, try it out!
Quoting Kimi.ai: 🚀 Hello, Kimi K2 Thinking! The Open-Source Thinking Agent Model is here. 🔹 SOTA on HLE (44.9%) and BrowseComp (60.2%) 🔹 Executes up to 200 – 300 sequential tool calls without human interference 🔹 Excels in reasoning, agentic search, and coding 🔹 256K context window Built Link: https://x.com/Kimi_Moonshot/status/1986449512538513505

Folk focusing on data centers when the real $$s will be in teleoperation centers

RT Emad Soon (hopefully)
Quoting Unitree: Embodied Avatar: Full-body Teleoperation Platform🥳 Everyone has fantasized about having an embodied avatar! Full-body teleoperation and full-body data acquisition platform is waiting for you to try it out! Link: https://x.com/UnitreeRobotics/status/1986329686251872318

RT Dheemanth Reddy Here is maya1, our open-source voice model. We're building the future of voice intelligence. The @mayaresearch_ai team is incredible; amazing work by the team. Remarkable moment.

RT DiscussingFilm Coca-Cola’s annual Christmas advert is AI-generated again this year. The company says they used even fewer people to make it — “We need to keep moving forward and pushing the envelope… The genie is out of the bottle, and you’re not going to put it back in”

RT BrianEMcGrath Amazing interview with @RaoulGMI and @EMostaque! Some takeaways from this conversation:
1. AIs (LLMs) use tokens, at roughly 1.3 tokens per word.
2. The avg person speaks 20,000 tokens / day.
3. The avg person thinks about 200,000 tokens / day.
4. The latest Grok 4 model costs $0.50 per 1 million tokens.
So current LLMs can do the equivalent of a week's worth of knowledge work for $0.50 with an IQ higher than the avg person! ...and they will only get better. Things are changing rapidly.
Quoting Raoul Pal: A conversation with @EMostaque will always blow your mind. This one is no exception... Enjoy! Link: https://x.com/RaoulGMI/status/1983883585586393195
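The "week of knowledge work for $0.50" conclusion follows from the listed figures only if a "week" means five working days. A sketch of that arithmetic, with the 5-day week labeled as an assumption:

```python
# Token arithmetic behind the "a week of knowledge work for $0.50" takeaway.
# Inputs are the figures listed in the post; the 5-day work week is an assumption.
THINK_TOKENS_PER_DAY = 200_000
SPEAK_TOKENS_PER_DAY = 20_000
PRICE_PER_MILLION_USD = 0.50   # quoted Grok 4 price
WORKDAYS_PER_WEEK = 5          # assumption: a working week, not a calendar week


def cost_usd(tokens: int, price_per_million: float) -> float:
    """Cost of a token count at a given $/1M-token price."""
    return tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    week_tokens = THINK_TOKENS_PER_DAY * WORKDAYS_PER_WEEK
    print(f"{week_tokens:,} tokens -> ${cost_usd(week_tokens, PRICE_PER_MILLION_USD):.2f}")
    print(f"a day of speech: ${cost_usd(SPEAK_TOKENS_PER_DAY, PRICE_PER_MILLION_USD):.3f}")
```

Five days of "thinking" at 200,000 tokens a day is exactly 1,000,000 tokens, i.e. $0.50; a full 7-day week would be $0.70, and a day's worth of speech about one cent.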