Jeremy Howard

Bio

🇦🇺 Co-founder: @AnswerDotAI & @FastDotAI ; Prev: professor @ UQ; Stanford fellow; @kaggle president; @fastmail/@enlitic/etc founder https://t.co/16UBFTX7mo

Platforms

Content history

I agree with @dileeplearning
Dileep George: I agree with @ylecun Link: https://x.com/dileeplearning/status/1988320699493339284

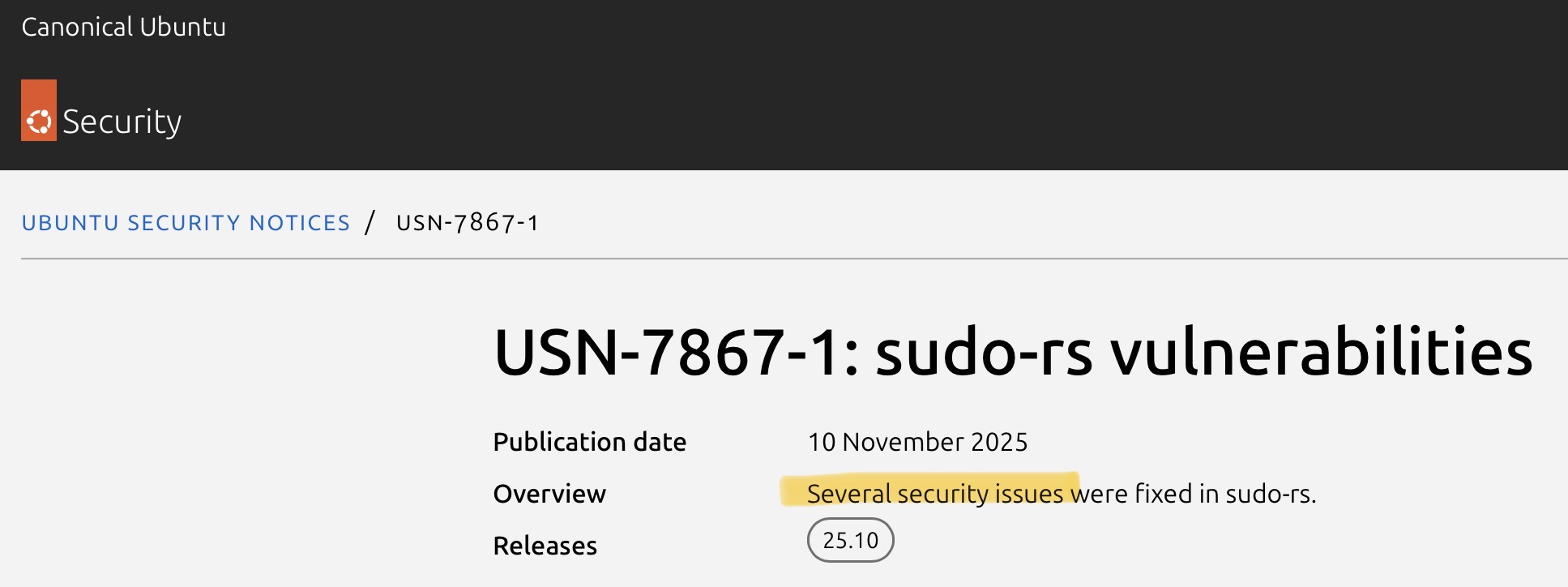

RT Antonio Sarosi Porting decades old programs to Rust is dumb. They already fixed countless bugs / security issues, spend the time fixing remaining issues instead of starting all over again and re-introducing them. Memory safety doesn't prevent dumb logical errors. Use Rust for new software.
The Lunduke Journal: Multiple, serious security vulnerabilities found in the Rust clone of Sudo — which shipped with Ubuntu 25.10 (the most recent release). Not little vulnerabilities: We’re talking about the disclosure of passwords and total bypassing of authentication. In fact, we’re getting new Link: https://x.com/LundukeJournal/status/1988346904581726501

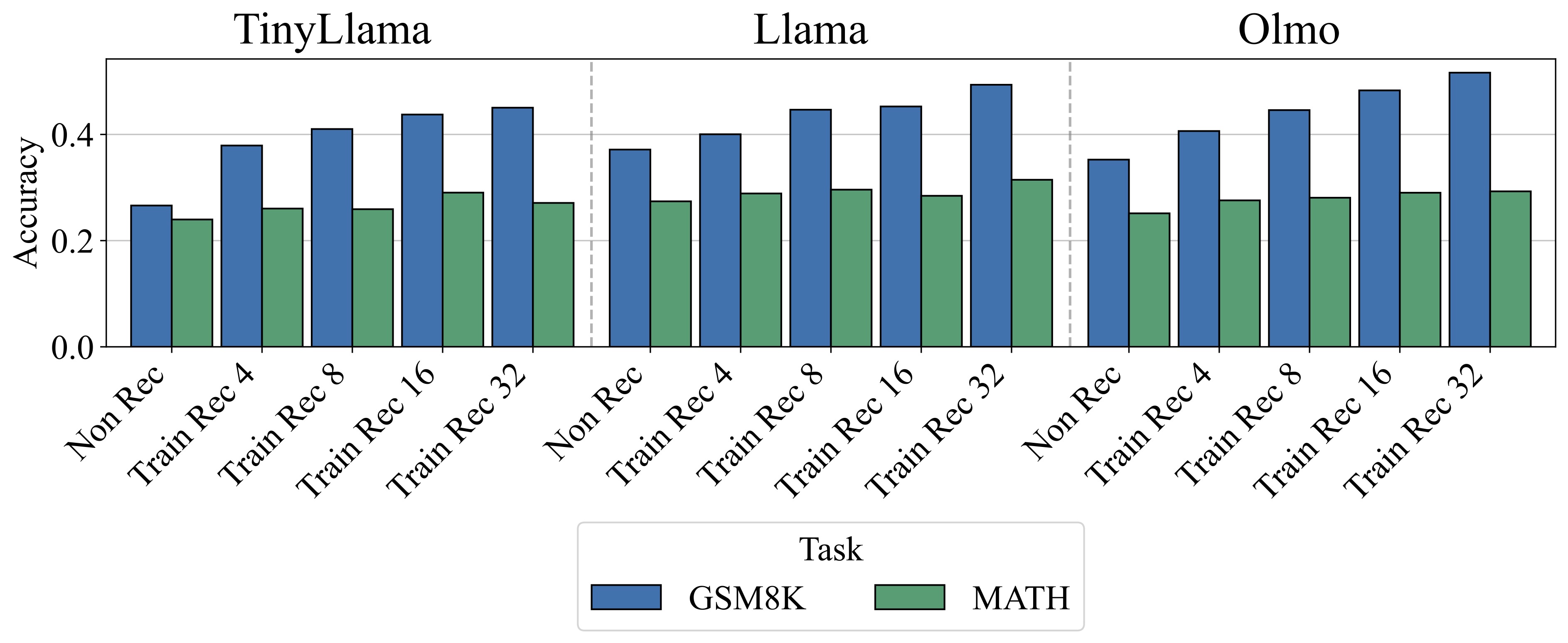

RT Micah Goldblum 🚨We converted pretrained LLMs into looped LLMs that can crank up performance by looping for more iterations. Our looped models surpass the performance of the pretrained models we started out with, showing that existing models benefit from increased computational depth. 📜1/9

RT Sully noticed a pretty worrying trend the more i use llms my day to day skills are slowly atrophying and im relying more and more on models for even simple tasks happening for coding, writing etc sometimes i dont even want to do the task if theres no ai to help

RT Rachel Thomas Re "People who go all in on AI agents now are guaranteeing their obsolescence. If you outsource all your thinking to computers, you stop upskilling, learning, and becoming more competent. AI is great at helping you learn." @jeremyphoward @NVIDIAAI https://www.youtube.com/watch?v=zDkHJDgefyk 2/

RT Rachel Thomas TensorFlow was all about making it easier for computers. PyTorch *won* because it was about making it easier for humans. It’s disappointing to see AI community focusing on what’s easiest for machines again (prioritizing AI agents & not centering humans). -- @jeremyphoward 1/

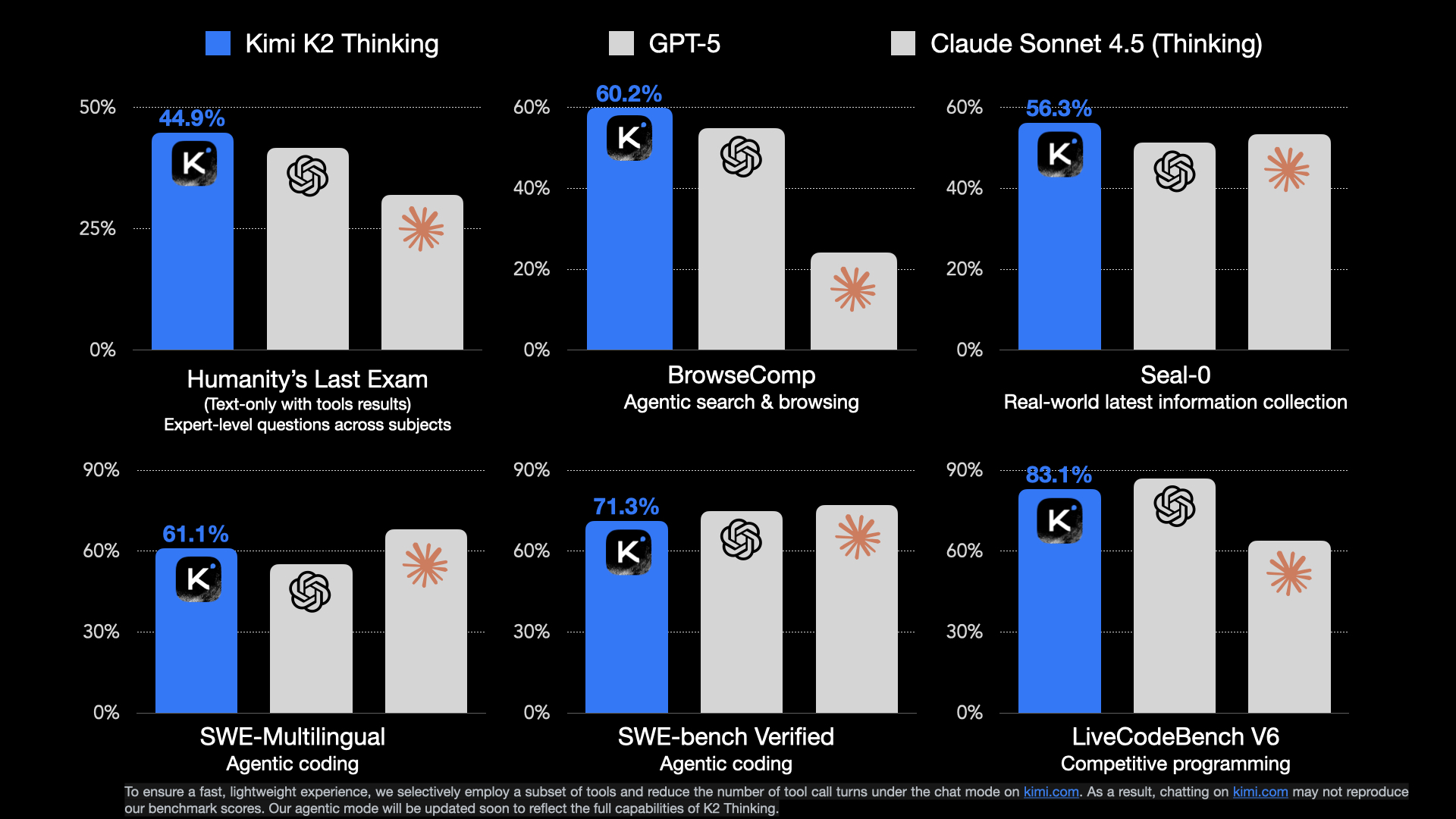

Interesting bot account this one. Check out the posting history. Wonder who is organizing this, and why.
Michael: @jeremyphoward @Kimi_Moonshot Don’t fall for China propaganda. You don’t need H100s when your model’s trained on distilled U.S. knowledge — cheap A800s, H800s, even RTX 4090s can handle that just fine using plain old BF16 ops. But when that distilled-knowledge faucet closes in 2026, what then? Link: https://x.com/letsgomike888/status/1987675934548471993

RT 張小珺 Xiaojùn If you are interested in Kimi K2 thinking, you can check out this interview with Yang Zhilin, founder of Kimi (with Chinese and English bilingual subtitles): https://youtu.be/91fmhAnECVc?si=AKNvfeNvxvfYF7fF

RT Shekswess It’s frustrating how labs like @Kimi_Moonshot, @Alibaba_Qwen, @deepseek_ai, @allen_ai, @huggingface... share their research, pipelines, and lessons openly, only for closed-source labs to quietly use that knowledge to build better models without ever giving back.

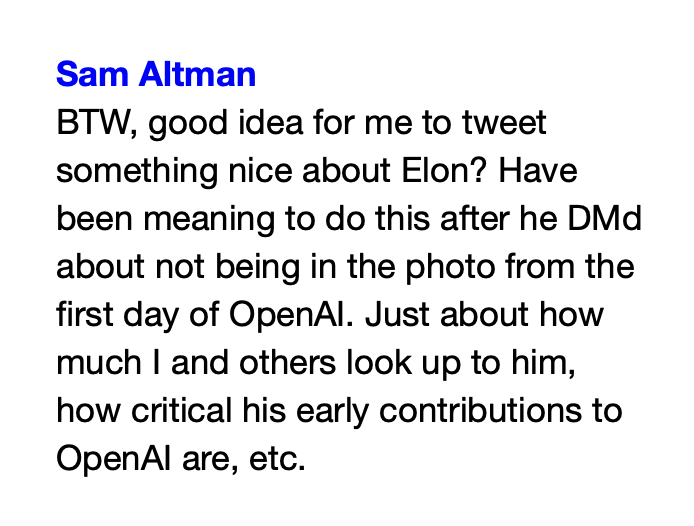

RT “paula” the tweet
Internal Tech Emails: Sam Altman texts Shivon Zilis February 9, 2023 Link: https://x.com/TechEmails/status/1987199248732180862

The really funny part is he plagiarized the image in the first place
Acer: no because most mathematicians don’t actually care to evaluate integrals and those that do are basically almost always analytic number theorists, and they usually almost always just need to bound them. Keep doing your calculus homework bud. Link: https://x.com/AcerFur/status/1987023190036480180

RT Lucas Beyer (bl16) There's no better feeling than coming up with a simpler solution. And then an even simpler one. And then an EVEN SIMPLER one. I love it. It's also the hardest thing to do in programming, because over-complicating things is so so easy, I see it happen everywhere all the time.

RT Crystal I'm so proud!! The open-source trillion parameters reasoning model <3 > SOTA on HLE (44.9%) and BrowseComp (60.2%)
Kimi.ai: 🚀 Hello, Kimi K2 Thinking! The Open-Source Thinking Agent Model is here. 🔹 SOTA on HLE (44.9%) and BrowseComp (60.2%) 🔹 Executes up to 200 – 300 sequential tool calls without human interference 🔹 Excels in reasoning, agentic search, and coding 🔹 256K context window Built Link: https://x.com/Kimi_Moonshot/status/1986449512538513505

A Guide to Solveit Features

An overview of the features of the Solveit platform, which is designed to make exploration and iterative development easier and faster.

RT Stefan Schubert Lol
Paul Novosad: What happens when online job applicants start using LLMs? It ain't good. 1. Pre-LLM, cover letter quality predicts your work quality, and a good cover gets you a job 2. LLMs wipe out the signal, and employer demand falls 3. Model suggests high ability workers lose the most 1/n Link: https://x.com/paulnovosad/status/1985794453576221085

RT Joseph Redmon I’m working on a new thing, we’re so back…
Ai2: Introducing OlmoEarth 🌍, state-of-the-art AI foundation models paired with ready-to-use open infrastructure to turn Earth data into clear, up-to-date insights within hours—not years. Link: https://x.com/allen_ai/status/1985719070407176577

RT GPU MODE If you'd like to win your own Dell Pro Max with GB300 we're launching a new kernel competition with @NVIDIAAI @sestercegroup @Dell to optimize NVF4 kernels on B200 2025 has seen a tremendous rise of pythonic kernel DSLs, we got on-prem hardware to have reliable ncu benchmarking available to all and we hope the best kernel DSL and the best kernel DSL author win

Let’s Build the GPT Tokenizer: A Complete Guide to Tokenization in LLMs

A text and code version of Karpathy’s famous tokenizer video.

How to Solve it With Code course now available

An email sent to all fast.ai forum users.

fasttransform: Reversible Pipelines Made Simple

Introducing fasttransform, a Python library that makes data transformations reversible and extensible through the power of multiple dispatch.

What AI can tell us about microscope slides

A friendly introduction to Foundation Models for Computational Pathology

A New Chapter for fast.ai: How To Solve It With Code

fast.ai is joining Answer.AI, and we’re announcing a new kind of educational experience, ‘How To Solve It With Code’

In defense of screen time

Pundits say my husband and I are parenting wrong.

A new old kind of R&D lab

Answer.AI is a new kind of AI R&D lab which creates practical end-user products based on foundational research breakthroughs.

Can LLMs learn from a single example?

We’ve noticed an unusual training pattern in fine-tuning LLMs. At first we thought it was a bug, but now we think it shows LLMs can learn effectively from a single example.

AI and Power: The Ethical Challenges of Automation, Centralization, and Scale

Moving AI ethics beyond explainability and fairness to empowerment and justice

AI Safety and the Age of Dislightenment

Model licensing & surveillance will likely be counterproductive by concentrating power in unsustainable ways

Is Avoiding Extinction from AI Really an Urgent Priority?

The history of technology suggests that the greatest risks come not from the tech, but from the people who control it

Mojo may be the biggest programming language advance in decades

Mojo is a new programming language, based on Python, which fixes Python’s performance and deployment problems.

From Deep Learning Foundations to Stable Diffusion

We’ve released our new course with over 30 hours of video content.

GPT 4 and the Uncharted Territories of Language

Language is a source of limitation and liberation. GPT 4 pushes this idea to the extreme by giving us access to unlimited language.

I was an AI researcher. Now, I am an immunology student.

Last year, I became captivated by a new topic in a way that I hadn’t felt since I first discovered machine learning

1st Two Lessons of From Deep Learning Foundations to Stable Diffusion

4 videos from Practical Deep Learning for Coders Part 2, 2022 have been released as a special early preview of the new course.

Deep Learning Foundations Signup, Open Source Scholarships, & More

Signups are now open for Practical Deep Learning for Coders Part 2, 2022. Scholarships are available for fast.ai community contributors, open source developers, and diversity scholars.

From Deep Learning Foundations to Stable Diffusion

Practical Deep Learning for Coders part 2, 2022