Georgi Gerganov

Bio

24th at the Electrica puzzle challenge | https://t.co/baTQS2bdia

Platform

Content history

RT Jeff Geerling Just tried out the new built-in WebUI feature of llama.cpp and it couldn't be easier. Just start llama-server with a host and port, and voila!

Initial M5 Neural Accelerators support in llama.cpp. Enjoy faster TTFT in all ggml-based software (requires macOS Tahoe 26): https://github.com/ggml-org/llama.cpp/pull/16634

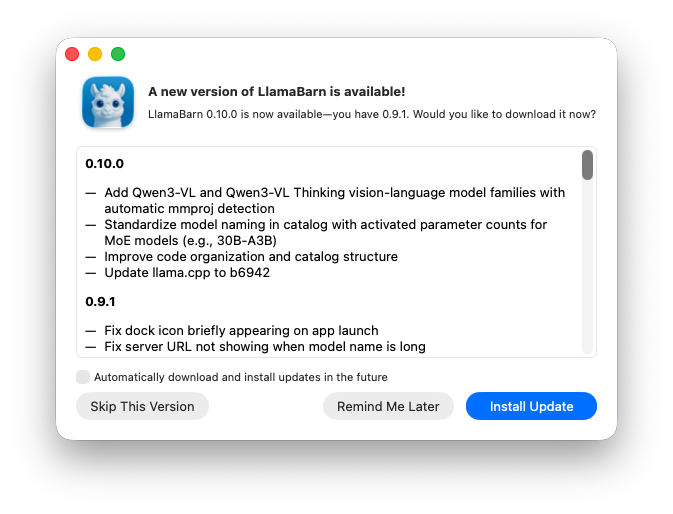

RT Emanuil Rusev Re @fishright @ggerganov Just pushed a fix for this — this is what first launch is going to look like in the next version.

RT clem 🤗 When you run AI on your device, it is more efficient and less big brother and free! So it's very cool to see the new llama.cpp UI, a chatgpt-like app that fully runs on your laptop without needing wifi or sending any data external to any API. It supports:

- 150,000+ GGUF models
- Drop in PDFs, images, or text documents
- Branch and edit conversations anytime
- Parallel chats and image processing
- Math and code rendering
- Constrained generation with JSON schema supported

Well done @ggerganov and team!

RT yags llama.cpp developers and community came together in a really impressive way to implement Qwen3-VL models. Check out the PRs, it's so cool to see the collaboration that went into getting this done. Standard formats like GGUF, combined with mainline llama.cpp support, ensure the models you download will work anywhere you choose to run them. This protects you from getting unwittingly locked into niche providers' custom implementations that won't run outside their platforms.

Quoted post from Qwen: 🎉 Qwen3-VL is now available on llama.cpp! Run this powerful vision-language model directly on your personal devices, fully supported on CPU, CUDA, Metal, Vulkan, and other backends. We've also released GGUF weights for all variants, from 2B up to 235B. Download and enjoy! 🚀 🤗 Link: https://x.com/Alibaba_Qwen/status/1984634293004747252

RT Qwen 🎉 Qwen3-VL is now available on llama.cpp! Run this powerful vision-language model directly on your personal devices, fully supported on CPU, CUDA, Metal, Vulkan, and other backends. We've also released GGUF weights for all variants, from 2B up to 235B. Download and enjoy! 🚀

- 🤗 Hugging Face: https://huggingface.co/collections/Qwen/qwen3-vl
- 🤖 ModelScope: https://modelscope.cn/collections/Qwen3-VL-5c7a94c8cb144b
- 📌 PR: https://github.com/ggerganov/llama.cpp/pull/16780

RT Vaibhav (VB) Srivastav BOOM: We've just re-launched HuggingChat v2 💬 - 115 open source models in a single interface is stronger than ChatGPT 🔥

Introducing: HuggingChat Omni 💫

- Select the best model for every prompt automatically 🚀
- Automatic model selection for your queries
- 115 models available across 15 providers including @GroqInc, @CerebrasSystems, @togethercompute, @novita_labs, and more

Powered by HF Inference Providers: access hundreds of AI models using only world-class inference providers.

Omni uses a policy-based approach to model selection (after experimenting with different methods). Credits to @katanemo_ for their small routing model: katanemo/Arch-Router-1.5B

Coming next:

- MCP support with web search
- File support
- Omni routing selection improvements
- Customizable policies

Try it out today at hf[dot] co/chat 🤗

David Finsterwalder | eu/acc: Important info. The issue in that benchmark seems to be ollama. Native llama.cpp works much better. Not sure how ollama can fail so hard to wrap llama.cpp. The lesson: Don't use ollama. Especially not for benchmarks. Link: https://x.com/DFinsterwalder/status/1978372050239516989