Jeremy Howard

Bio

🇦🇺 Co-founder: @AnswerDotAI & @FastDotAI ; Prev: professor @ UQ; Stanford fellow; @kaggle president; @fastmail/@enlitic/etc founder https://t.co/16UBFTX7mo

Platform

Content history

RT Leon Lang I understand that OpenAI employees might have been reassured by Sam’s tweet from yesterday. But this blogpost is so blatantly deceptive that I can’t grasp how you can have any other reaction than to be ashamed to work for your company. Original tweet: https://x.com/Lang__Leon/status/2027903509081821208

For those following the DoW AI drama, I highly recommend reading this post explaining how @OpenAI approached the negotiations with the DoW.

RT Andreas Kirsch 🇺🇦 I'm speechless at OpenAI releasing that contract excerpt and acting as if there aren't gaping holes that could be exploited far beyond their stated "red lines." I'm not a lawyer, but this is pretty obvious and common sense. (And to be clear: if Google had signed the same deal, I'd be saying the same thing internally. The issues here are bigger than friendly competition between companies.) OpenAI's "red lines" are: no mass domestic surveillance, no directing autonomous weapons, and no high-stakes automated decisions. They argue their cloud-only deployment + safety stack + cleared OpenAI personnel "in the loop" make violations impossible. They also claim the contract references the relevant laws/policies "as they exist today" so future changes won't weaken the standards. But the actual language they published is still full of obvious escape hatches. This is why Anthropic refusing to sign makes sense. Reporting on the Anthropic–"DoW"/Pentagon standoff described them saying the proposed contract language was framed as compromise but paired with "legalese that would allow safeguards to be disregarded at will." You don't need to agree with Anthropic on everything to see what they're reacting to: language that sounds like ethics but cashes out as essentially "subject to whatever the government decides later." ## Autonomous weapons The problem is that the restriction is conditional: it depends on what "law/regulation/policy requires human control" for. If policy definitions are weak (or later revised), the contract language itself doesn't read like a durable "no autonomous weapons" ban. It reads like "we'll follow whatever the current regime says requires human control." OpenAI says elsewhere that the agreement "locks in" today's standards even if laws/policies change. If that "freeze" clause is real and enforceable, sure, but it's not visible in the excerpt itself, so the excerpt alone doesn't justify the level of confidence they're projecting...

RT Miles Brundage In light of what external lawyers and the Pentagon are saying, OpenAI employees’ default assumption here should unfortunately be that OpenAI caved + framed it as not caving, and screwed Anthropic while framing it as helping them. Hope that is wrong + they get evidence otherwise Original tweet: https://x.com/Miles_Brundage/status/2027768822372135318

RT Xeophon If you are an (AI) researcher, it’s crucial to think about the implications about your research. I think this post from @giffmana is really thought provoking: Original tweet: https://x.com/xeophon/status/2027761750930567390

RT pamela mishkin the wildest part? If OAI actually wanted the redlines, they had the leverage to get them! pentagon not going to declare a SECOND American AI company a supply chain risk, could have held the line and forced real concessions and safety! Original tweet: https://x.com/manlikemishap/status/2027751243263705274

Not just did OpenAI defect and concede to this whole authoritarian maneuver, but Sam also went and just deceptively framed the whole thing to try to make it look like they had agreed to the same Anthropic redlines, which is not actually true. https://x.com/_NathanCalvin/status/2027597992195195234?s=20

RT Bun am i a supply chain risk now??? Original tweet: https://x.com/bunjavascript/status/2027638567317737895

RT Nathan Lambert Every Anthropic employee proudly amplifying their company comms and 0 supporting Sama’s weird scooping up of the DoW contract is pretty telling. Original tweet: https://x.com/natolambert/status/2027595909299900482

RT Anthropic A statement on the comments from Secretary of War Pete Hegseth. https://anthropic.com/news/statement-comments-secretary-war Original tweet: https://x.com/AnthropicAI/status/2027555481699446918

RT Dean W. Ball Think about the power Hegseth is asserting here. He is claiming that the DoD can force all contractors to stop doing business of any kind with arbitrary other companies. In other words, every operating system vendor, every manufacturer of hardware, every hyperscaler, every type of firm the DoD contracts with—all their services and products can be denied to any economic actor at will by the Secretary of War. This is obviously a psychotic power grab. It is almost surely illegal, but the message it sends is that the United States Government is a completely unreliable partner for any kind of business. The damage done to our business environment is profound. No amount of deregulatory vibes sent by this administration matters compared to this arson. Original tweet: https://x.com/deanwball/status/2027521251263000765

This week, Anthropic delivered a master class in arrogance and betrayal as well as a textbook case of how not to do business with the United States Government or the Pentagon. Our position has never wavered and will never waver: the Department of War must have full, unrestricted

RT Ronan Collobert 26 years ago I created OG Torch. Now I am on X. Samy: whhhatt, you want to call it Torche? Me: yes, why not? Samy: but people will know what it means in French? <after 1 day of name brainstorming> Samy: ok, remove the "e", let's call it Torch. I think it was a good name. Original tweet: https://x.com/trebolloc/status/2027433605161742811

RT Anthropic A statement from Anthropic CEO, Dario Amodei, on our discussions with the Department of War. https://www.anthropic.com/news/statement-department-of-war Original tweet: https://x.com/AnthropicAI/status/2027150818575528261

RT David Gwyer - AI Evals & RAG | ML Engineer I just created a Chrome extension to turn SolveIt (by @answerdotai, @jeremyphoward) into an interactive voice driven environment, and the AI also talks back to you! 🤯 Here is a demo of the extension, a back-and-forth quiz about snooker. 🙂 Original tweet: https://x.com/dgwyer/status/2027058892648169528

RT Guillermo Rauch We've identified, responsibly disclosed, and confirmed 2 critical, 2 high, 2 medium, 1 low security vulnerabilities in Cloudflare's vibe-coded framework Vinext. We believe the security of the internet is the highest priority, especially in the age of AI. Vibe coding is a useful tool, especially when used responsibly. Our security research and framework teams are extending their help and expertise to Cloudflare in the interest of the public internet's security. Original tweet: https://x.com/rauchg/status/2026864132423823499

Politics and organizational behavior have always been the *most* important thing to consider and understand when working on AI risks. But nearly the whole "alignment" world has focused only on *technical* risks (i.e. "what if AI wants to turn us into paperclips").

did lesswrong ever predict that the first big challenge to alignment would be "the us government puts a gun to your head and tells you to turn off alignment"

RT Anirudh Goyal Yes! DP → Batch Sharding; TP → Intra-layer Sharding; PP → Layer Sharding; EP → Expert Sharding. Original tweet: https://x.com/anirudhg9119/status/2026727785431994461

A bit polarizing comment: its too late but I kind of think whoever named TP DP EP combinations probably slowed down progress by inventing terminology thats borderline absurd to describe basic sharding
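To make the "it's all just sharding" point above concrete, here is a minimal pure-Python sketch (not taken from either post) that treats DP as splitting the batch rows and TP as splitting the weight columns of one linear layer. The helper `matmul` and the toy matrices are hypothetical, chosen only for illustration; PP and EP are not shown.

```python
def matmul(x, w):
    """Plain list-of-lists matrix multiply: (n x k) @ (k x m) -> (n x m)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)] for row in x]

# Toy data: a batch of 4 examples with 2 features, and a 2x4 weight matrix.
x = [[1, 2], [3, 4], [5, 6], [7, 8]]
w = [[1, 0, 2, 1], [0, 1, 1, 2]]

full = matmul(x, w)  # unsharded reference result

# DP (batch sharding): each worker gets half the rows and a full copy of w.
dp = matmul(x[:2], w) + matmul(x[2:], w)

# TP (intra-layer sharding): each worker gets the full batch and half of w's columns.
w_left = [row[:2] for row in w]
w_right = [row[2:] for row in w]
tp = [left + right for left, right in zip(matmul(x, w_left), matmul(x, w_right))]

# Both shardings reassemble to the same output as the unsharded layer.
print(dp == full, tp == full)
```

In both cases the only difference is which axis is cut and how the partial results are concatenated.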

RT You Jiacheng it's so over? Original tweet: https://x.com/YouJiacheng/status/2026680139929809267

RT Qwen 🚀 Introducing the Qwen 3.5 Medium Model Series Qwen3.5-Flash · Qwen3.5-35B-A3B · Qwen3.5-122B-A10B · Qwen3.5-27B ✨ More intelligence, less compute. • Qwen3.5-35B-A3B now surpasses Qwen3-235B-A22B-2507 and Qwen3-VL-235B-A22B — a reminder that better architecture, data quality, and RL can move intelligence forward, not just bigger parameter counts. • Qwen3.5-122B-A10B and 27B continue narrowing the gap between medium-sized and frontier models — especially in more complex agent scenarios. • Qwen3.5-Flash is the hosted production version aligned with 35B-A3B, featuring: – 1M context length by default – Official built-in tools 🔗 Hugging Face: https://huggingface.co/collections/Qwen/qwen35 🔗 ModelScope: https://modelscope.cn/collections/Qwen/Qwen35 🔗 Qwen3.5-Flash API: https://modelstudio.console.alibabacloud.com/ap-southeast-1/?tab=doc#/doc/?type=model&url=2840914_2&modelId=group-qwen3.5-flash Try in Qwen Chat 👇 Flash: https://chat.qwen.ai/?models=qwen3.5-flash 27B: https://chat.qwen.ai/?models=qwen3.5-27b 35B-A3B: https://chat.qwen.ai/?models=qwen3.5-35b-a3b 122B-A10B: https://chat.qwen.ai/?models=qwen3.5-122b-a10b Would love to hear what you build with it. Original tweet: https://x.com/Alibaba_Qwen/status/2026339351530188939

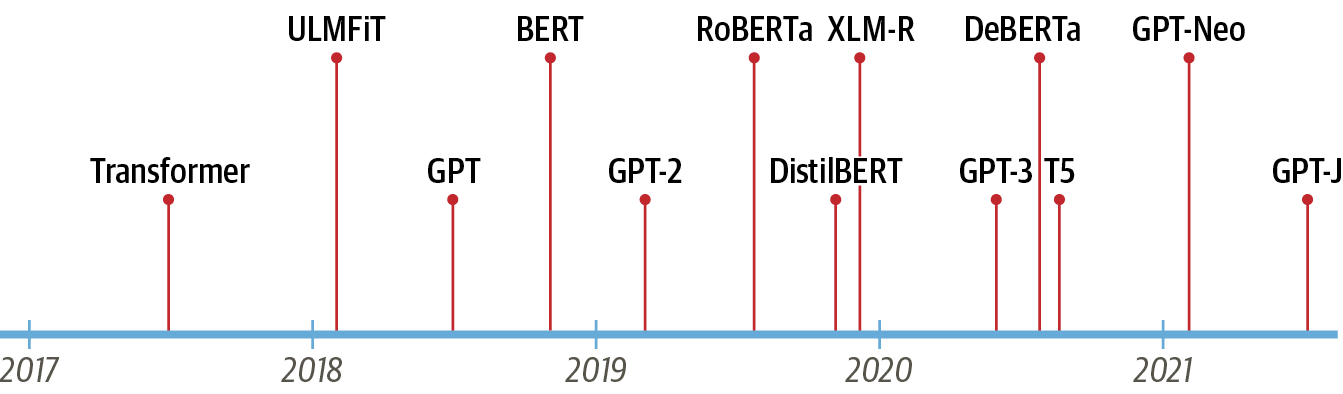

An enormous amount of the work in all commercial AI labs comes from open source software. E.g the original LLM, ULMFiT, was open source, and led to the creation of GPT at OpenAI, where the Anthropic founding team was working at the time. Innovation happens through open research

I can finally publicly state one reason I’ve not been bullish on open source catching up and overtaking the frontier labs: we observed several of the top open source models distilling from Claude. Leapfrogging happens through innovation, not distillation.

As Piotr explains, this is a nasty and unexpected example of @simonw's "lethal trifecta":

@simonw "It scares me a bit, when I see how bug free the code looks like." Me too! 😮

RT Chris Lattner The Claude C Compiler is the first AI-generated compiler that builds complex C code, built by @AnthropicAI. Reactions ranged from dismissal as "AI nonsense" to "SW is over": both takes miss the point. As a compiler🐉 expert and experienced SW leader, I see a lot to learn: 👇 Original tweet: https://x.com/clattner_llvm/status/2024564314347360272

RT Modular Mojo in Jupyter is here 🙌 @jeremyphoward released a new Jupyter kernel that lets you run Mojo directly in notebooks. It works great on macOS, supports recent Linux versions, and is easy to install via pip or uv. Give it a try and let us know what you build! https://github.com/AnswerDotAI/mojokernel #MojoLang #OpenSource #DeveloperTools Original tweet: https://x.com/Modular/status/2024198871875014815

RT Mark Cuban There are generally 2 types of LLM users, those that use it to learn everything , and those that use it so they don’t have to learn anything. Original tweet: https://x.com/mcuban/status/2023750950322889050

RT Frank Yan As promised, here's the short film Jia Zhangke produced using Seedance 2.0 for Chinese New Year and his take on AI filmmaking Original tweet: https://x.com/FrankYan2/status/2023257752017981446

All 3 points here are wrong BTW: ❌ ARC-AGI came out 2 years ago ❌ It was declared "impossible for AI" ❌ beaten within the year, so they made ARC-AGI-2. Fact checking should occur *prior* to posting.

i don't expect AI skeptics to be moved by a single result, but it seems notable that we keep making harder tests for AI models and they keep beating them. (ARC-AGI came out 2 years ago and was declared "impossible for AI," then got beaten within the year, so they made

I was planning this morning when I got up to point out that Andrej had failed to take advantage of the beautiful consistency of the Vector-Jacobian product (VJP) and had wasted loads of lines. Woke up to discover he's already figured it out! His diff is worth studying:

New art project. Train and inference GPT in 243 lines of pure, dependency-free Python. This is the *full* algorithmic content of what is needed. Everything else is just for efficiency. I cannot simplify this any further. https://gist.github.com/karpathy/8627fe009c40f57531cb18360106ce95

RT Jeff Clune Can AI agents design better memory mechanisms for themselves? Introducing Learning to Continually Learn via Meta-learning Memory Designs. A meta agent automatically designs memory mechanisms, including what info to store, how to retrieve it, and how to update it, enabling agentic systems to continually learn across diverse domains. Led by @yimingxiong_ with @shengranhu 🧵👇 1/ Original tweet: https://x.com/jeffclune/status/2021242681826095179

RT bora New NanoTabPFN training speed record: Beating RF in 10.10 minutes Previous record: 54.41 minutes Changelog: - Scaled Dot-Product Attention rewrite with explicit QKV - Pre-norm transformer blocks - bfloat16 autocast - Increase learning rate - Increase embedding size - Reduce attention heads This record is by @carterprince03 Original tweet: https://x.com/boratwits/status/2020943088428917240

RT Chenwei Cui Introducing Multi-Head LatentMoE 🚀 Turns out, making NVIDIA's LatentMoE [1] multi-head further unlocks O(1), balanced, and deterministic communication. Our insight: Head Parallel; Move routing from before all-to-all to after. Token duplication happens locally. Always uniform, always deterministic. It works orthogonally to EP as a new dimension of parallelism. For example, use HP for intra-cluster all-to-all as a highway, then use EP locally. We propose FlashAttention-like routing and expert computation, both exact, IO-aware, and constant memory. This is to handle the increased number of sub-tokens. Results: - We replicate LatentMoE and confirm it is indeed faster than MoE, with matching model performance. (See Design Principle IV in [1]) - Up to 1.61x faster training than MoE+EP with identical model performance. - Higher model performance while still 1.11x faster with doubled granularity. 📄 Paper: http://arxiv.org/abs/2602.04870v1 💻 Code: http://github.com/kerner-lab/Sparse-GPT-Pretraining [1] Elango et al., "LatentMoE: Toward Optimal Accuracy per FLOP and Parameter in Mixture of Experts", 2026. http://arxiv.org/abs/2601.18089 Original tweet: https://x.com/ccui42/status/2020368208197566904

![Image attached to Chenwei Cui's Multi-Head LatentMoE post](https://pbs.twimg.com/media/HAnJgGoacAMXAB_?format=jpg&name=orig)

Did you know that, with ghostty, you can use kitty graphics inside tmux? Amazing work from @mitchellh!

RT Logan Graham I'm hiring for a new, very cool role: a Research Scientist to lead Emerging Risks. By Emerging Risks, I mean all the ways the humanity <> AGI interface will be weird. Not catastrophic risks, but more agent businesses / monitoring / AI with power. Original tweet: https://x.com/logangraham/status/2019911162960269678

RT Jared Palmer Stacked Diffs on @GitHub will start rolling out to early design partners in an alpha next month. In the meantime, here's video of our progress so far: (h/t for @georgebrock + team for their awesome work) Original tweet: https://x.com/jaredpalmer/status/2019817235163074881

RT Graham Neubig It is very impressive that Opus 4.6 built a working C compiler in 2 weeks, using agent teams and burning $20k worth of tokens. It's also impressive that @rui314 built one by himself in 7-or-so days worth of commits 😀 https://github.com/rui314/chibicc Original tweet: https://x.com/gneubig/status/2019585049860145400

New Engineering blog: We tasked Opus 4.6 using agent teams to build a C compiler. Then we (mostly) walked away. Two weeks later, it worked on the Linux kernel. Here's what it taught us about the future of autonomous software development. Read more: https://www.anthropic.com/engineering/building-c-compiler

RT Benjamin Marie The KV cache size of Qwen3 Coder Next is very small: 6.0 GB at 262,144 tokens Don't quantize this KV cache. For comparison, for the same number of tokens, Qwen3 0.6B's KV cache consumes 28 GB of memory Original tweet: https://x.com/bnjmn_marie/status/2018969422531285360
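The 28 GB figure above can be sanity-checked with the standard KV cache size formula for full attention. This is a rough sketch, not the post author's calculation: the Qwen3 0.6B settings used here (28 layers, 8 KV heads, head dimension 128, 16-bit cache values) are assumptions recalled from the published model config and should be checked against it, and `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, n_tokens, bytes_per_value=2):
    """Size of a full-attention KV cache: keys plus values for every layer and token."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * n_tokens

# Assumed Qwen3 0.6B config (verify against the model's config file).
qwen3_06b = kv_cache_bytes(n_layers=28, n_kv_heads=8, head_dim=128, n_tokens=262_144)

print(qwen3_06b / 2**30)  # cache size in GiB
```

Under those assumptions the result is 28 GiB, which agrees with the post if its "GB" is read as GiB. The formula also shows the levers that shrink a cache: fewer layers that keep a full KV cache, fewer KV heads, or a smaller head dimension.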

RT Damien Teney Re @PetarV_93 @fedzbar @ccperivol @sindero "In this paper, we provide novel evidence that perplexity should not be blindly trusted as a model selection objective." Like this? 🤔⬇️ https://x.com/jeremyphoward/status/1881000354923544757 Original tweet: https://x.com/DamienTeney/status/2018413621361967216

Folks seem to rediscover this every couple of years. As I’ve been saying for many years, you have to track token accuracy, not loss/perplexity, otherwise you’ll wrongly think the validation loss going up is a bad thing.
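A toy illustration of the point above, under made-up numbers: each list holds the probability a binary classifier assigns to the correct label for five validation examples, so a prediction counts as correct when that probability exceeds 0.5. The values and the helper names `mean_nll` and `accuracy` are hypothetical.

```python
import math

def mean_nll(p_correct):
    """Average negative log-likelihood (cross-entropy loss) of the correct labels."""
    return -sum(math.log(p) for p in p_correct) / len(p_correct)

def accuracy(p_correct):
    """Fraction of examples where the correct label gets more than half the probability."""
    return sum(p > 0.5 for p in p_correct) / len(p_correct)

# Earlier checkpoint: hesitant everywhere, wrong on two examples.
early = [0.6, 0.6, 0.6, 0.4, 0.4]
# Later checkpoint: confident and right on four, very confidently wrong on one.
late = [0.99, 0.99, 0.99, 0.99, 0.001]

print(accuracy(early), mean_nll(early))
print(accuracy(late), mean_nll(late))
```

The later checkpoint gets more examples right, yet its loss is higher because a single confidently wrong prediction dominates the average. Tracking only loss or perplexity would rank the two checkpoints the wrong way round.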

RT trieu Mathematicians 🤝AI researchers https://arxiv.org/abs/2601.22401. Our take on AI solving Erdos problems: * Many "Open" problems are actually just obscure: many cases the AI didn't find something new, only rediscovered solutions buried in the literature. We present our systematic approach to reporting AI results on Erdos. * The real bottleneck is still human labor, e.g. we spent lots of time filtering out technically correct but meaningless solutions (AI missed Erdos’s original intent). * Acceleration in solving low-hanging fruits is real, but we also need to highlight the many more misses that require human auditing. Clear research directions ahead though, and we feel optimistic about drastically increasing the signal-to-noise ratio. More to come! Original tweet: https://x.com/thtrieu_/status/2018356459239870790

Here's the paper link to our scaled effort for tackling Erdős problems. We started with 700 problems marked ‘Open’ in the database. Our agent #Aletheia identified potential solutions to 200 problems. Initial human grading revealed 63 correct answers, followed by deep expert

My home town. It's true, Australia is home to some dangerous wildlife.

Bad: getting kicked out of a club with your boy Worse: your buddy throwing a chair because he’s mad & it hits you instead of the security guard

RT Lex Fridman Here's my conversation all about AI in 2026, including technical breakthroughs, scaling laws, closed & open LLMs, programming & dev tooling (Claude Code, Cursor, etc), China vs US competition, training pipeline details (pre-, mid-, post-training), rapid evolution of LLMs, work culture, diffusion, robotics, tool use, compute (GPUs, TPUs, clusters), continual learning, long context, AGI timelines (including how stuff might go wrong), advice for beginners, education, a LOT of discussion about the future, and other topics. It's a great honor and pleasure for me to be able to do this kind of episode with two of my favorite people in the AI community: 1. Sebastian Raschka (@rasbt) 2. Nathan Lambert (@natolambert) They are both widely-respected machine learning researchers & engineers who also happen to be great communicators, educators, writers, and X posters. This was a whirlwind conversation: everything from the super-technical to the super-fun. It's here on X in full and is up everywhere else (see comment). Timestamps: 0:00 - Introduction 1:57 - China vs US: Who wins the AI race? 10:38 - ChatGPT vs Claude vs Gemini vs Grok: Who is winning? 21:38 - Best AI for coding 28:29 - Open Source vs Closed Source LLMs 40:08 - Transformers: Evolution of LLMs since 2019 48:05 - AI Scaling Laws: Are they dead or still holding? 1:04:12 - How AI is trained: Pre-training, Mid-training, and Post-training 1:37:18 - Post-training explained: Exciting new research directions in LLMs 1:58:11 - Advice for beginners on how to get into AI development & research 2:21:03 - Work culture in AI (72+ hour weeks) 2:24:49 - Silicon Valley bubble 2:28:46 - Text diffusion models and other new research directions 2:34:28 - Tool use 2:38:44 - Continual learning 2:44:06 - Long context 2:50:21 - Robotics 2:59:31 - Timeline to AGI 3:06:47 - Will AI replace programmers? 3:25:18 - Is the dream of AGI dying? 3:32:07 - How AI will make money? 3:36:29 - Big acquisitions in 2026 3:41:01 - Future...

🤷

I still think about the version of me who could solve these in one sitting.

RT Mario Zechner Re but if you take 15 minutes to scan the codebase, you'll find that the clankers keep building abstractions upon abstractions and duplicate functionality all over the place, precisely because they can no longer grasp the project in full any longer. both claude and codex suffer from this. it's basically impossible to make changes and know their effects on the whole. testing is "does it compile" and "does it reply". the test suite is largely performative. no shade on Peter here, obvl., he's a machine and did his best to deal with the influx of *everything*. clawdbot works, for some values of "works". but I don't think it's a good idea to perpetuate this workflow as a working approach. the only way to keep things from exploding is human intervention, and it's a stressful never ending struggle, because you've essentially lost all control. we aren't there yet. Original tweet: https://x.com/badlogicgames/status/2017613815592964228

RT Mario Zechner it's funny, because yesterday, that same master clanker stomped all over the large clawdbot codebase, reverting fixes, breaking CI, because it can no longer grasp how stuff fits together. and nobody would have noticed. entirely fine in the clawdbot context, anything goes. but eventually, we need to stop kidding ourselves and realize, that the "engineering" part in SWE is still a thing, and the only thing that keeps our industry from imploding on itself when everyone and their mom gives in to the clankers 100%. Original tweet: https://x.com/badlogicgames/status/2017611753752850708

Clawdbot creator, Peter Steinberger says Opus is the best model overall, but Codex is his go-to for coding. He trusts Codex to handle big codebases with almost no mistakes. It’s more reliable and needs less handholding, which makes him faster. Claude Code can work too, but it

llms.txt - the agents file loved by agents 😘 u/Alfred_the_Butler says: "llms.txt as a standard convention makes interop easier"

RT Neil Rathi New paper, w/@AlecRad Models acquire a lot of capabilities during pretraining. We show that we can precisely shape what they learn simply by filtering their training data at the token level. Original tweet: https://x.com/neil_rathi/status/2017286042370683336

You can't use the "that won't age well" argument on something that has, in fact, aged well.

With the release of nbdev3 yesterday, now's a great time to discover why YOU should be using nbdev - and there's no better tldr than this thread that introduced nbdev2 in 2022, so take a look!: https://x.com/jeremyphoward/status/1552804293572722688

Our biggest launch in years: nbdev2, now boosted with the power of @quarto_pub! Use @ProjectJupyter to build reliable and delightful software fast. A single notebook creates a python module, tests, @github Actions CI, @pypi/@anacondainc packages, & more https://www.fast.ai/2022/07/28/nbdev-v2/

If you're wondering why LLMs haven't done any independent breakthrough scientific research yet, I explained *18 months ago* why that's not gonna happen (unless there's a major change to how LLMs work):

For those that hope (or worry) that LLMs will do breakthrough scientific research, I've got good (or bad) news: LLMs are particularly, exceedingly, marvellously ill-suited to this task. (if you're a researcher, you'll have noticed this already) Here's why🧵

This is the guy that created XLNet BTW. I've always felt that model was extremely under-rated - it's super impressive. Not at all surprised he's gone on to such great things. https://arxiv.org/abs/1906.08237

Here's a short video from our founder, Zhilin Yang. (It's his first time speaking on camera like this, and he really wanted to share Kimi K2.5 with you!)

I love this article so much. Rachel has done a deep dive into the psychology of vibe coding, & discovered underlying reasons why it's tripping so many people up psychologically (even whilst it can be helpful). Read it, so you know what to watch out for: https://www.fast.ai/posts/2026-01-28-dark-flow/

Vibe coding is the creation of large quantities of complex AI-generated code. Executives push lay-offs claiming AI can handle the work. Managers pressure employees to meet quotas of how much of their code must be AI-generated... yet results are far from what was promised 1/

RT Eric Ries Six months of salary. That's what Matt Mullenweg offered every Automattic employee to resign if they didn't believe in the company's direction. About 9% took the offer and left. Original tweet: https://x.com/ericries/status/2014831030285533298

We don't YOLO on top of microcode. There's many layers of increasingly deep abstraction on top of it, each depending on the layer below. Each is carefully tested and has well defined boundaries. That's why you only normally need understand the code at the layer you're using.

@itsandrewgao How much do you understand of the microcode that is running inside your CPU or GPU and is actually doing the work you want it to do? How much do you understand of the actual kernel and hardware drivers? The firmware on your SSD? You just YOLO your stuff on top of it and hope

RT Salman // 萨尔曼 I built a very minimal version of SolveIt in SolveIt (developed by @jeremyphoward and his team @answerdotai), based on the techniques taught in the SolveIt course. Original tweet: https://x.com/ForBo7_/status/2014157730873839922

RT Oz I'm studying immunobiology, and really enjoying the workflow of asking an LLM to design programming challenges for me. So for instance today I had to scan bacterial genomes for TLR9 ligands, which taught me a lot more than if I'd just read about this stuff in Janeway Original tweet: https://x.com/oznova_/status/2013802010739646930

RT Rachel Thomas Using LLMs for close reading provides a way to ask questions, explore rabbit holes, personalize, & build intuition Two examples in 🧵: - @jeremyphoward reads chapter of @ericries's new book (Incorruptible) - @johnowhitaker reads a dense machine learning paper (LeJEPA) 1/ Original tweet: https://x.com/math_rachel/status/2013755596378644880

RT Larry Dial New NanoGPT Speedrun WR at 99.3s (-5.6s) with a bigram hash embedding that is added to the residual stream before every layer. Inspiration from Svenstrup et al 2017 paper on Hash Embeddings, and Deepseek's Engram. Modded-NanoGPT now uses fewer training tokens than its parameter count, a radical divergence from the 20x Chinchilla ratio. https://github.com/KellerJordan/modded-nanogpt/pull/201 Original tweet: https://x.com/classiclarryd/status/2013520088297558274

RT Z.ai Introducing GLM-4.7-Flash: Your local coding and agentic assistant. Setting a new standard for the 30B class, GLM-4.7-Flash balances high performance with efficiency, making it the perfect lightweight deployment option. Beyond coding, it is also recommended for creative writing, translation, long-context tasks, and roleplay. Weights: http://huggingface.co/zai-org/GLM-4.7-Flash API: http://docs.z.ai/guides/overview/pricing - GLM-4.7-Flash: Free (1 concurrency) - GLM-4.7-FlashX: High-Speed and Affordable Original tweet: https://x.com/Zai_org/status/2013261304060866758

RT Kevin Gray A lot don't realize this but you can use TinyJit from @__tinygrad__ to execute python code at ludicrous speeds on CPU, WebGPU, Metal, OpenCL, Cuda, and even more hardware. So in my case I'm writing a software rasterizer for RL with tinygrad. Pretty cool I think. Original tweet: https://x.com/graykevinb/status/2012708863250780666

RT himanshu wait this is actually big. this deepseek research used LogitLens (lets you see what the model is 'thinking' at each layer) and CKA (compares what different layers are actually learning) to figure out why the new Engram architecture works. apparently this is the first time i have seen mech interpretability research being used in a capabilities paper. feels like a shift. Original tweet: https://x.com/himanshustwts/status/2012470011798155686

DeepSeek is back! "Conditional Memory via Scalable Lookup: A New Axis of Sparsity for Large Language Models" They introduce Engram, a module that adds an O(1) lookup-style memory based on modernized hashed N-gram embeddings Mechanistic analysis suggests Engram reduces the need

The answer turns out to be "yes, kinda". After spending few minutes clicking "like" on posts I liked and "show less like this" on those I don't, here's what my feed now contains.

RT Ritchie Vink So there was quite a sensational rant post titling "DuckDB beats Polars for 1TB of data" and the video "Polars Got Destroyed by DuckDB in this 1TB Test" that was shared a lot. There was no code shared for Polars and upon request, we were ignored. These posts were conveniently shared in posts and newsletters because they fit a narrative. In any case, I went through the effort to reproduce the dataset and run the exact benchmark. The post mentioned 64GB RAM, so I ran on a 5a.8xlarge (32vCPU / 64GB RAM). Polars did not go OOM, but finished the query in 14 minutes never exceeding 14GB RAM usage. On the same machine DuckDB also took 14 minutes. Both tools hit the bandwidth limit: 1 TB / 10 Gbps = 13.3 min, but that makes less of a title 😉. The whole benchmark was just hard to reproduce, the 1TB part of it made it unwieldy, but didn't matter. It could have done with a 100GB benchmark as the cardinality of the groups was just ~1800. Here is the Polars query: https://github.com/danielbeach/duckdbAndDaftEat1TB/pull/1 So I guess... Code or it didn't happen. Original tweet: https://x.com/RitchieVink/status/2012148362670149716
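The bandwidth bound quoted above (1 TB / 10 Gbps = 13.3 min) is a one-line unit conversion. A small sketch, assuming decimal units (1 TB = 10^12 bytes, 10 Gbps = 10^10 bits per second) and a hypothetical helper name `transfer_minutes`:

```python
def transfer_minutes(n_bytes, link_bits_per_second):
    """Minimum time to move n_bytes over a link, ignoring protocol overhead."""
    return n_bytes * 8 / link_bits_per_second / 60

# 1 TB over a 10 Gbps link.
minutes = transfer_minutes(1e12, 10e9)
print(round(minutes, 1))
```

That is 800 seconds, or about 13.3 minutes, so a 14-minute run on either engine is consistent with the query being limited by network throughput rather than by the query engine.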

RT vLLM When we added support for gpt-oss, the Responses API didn't have a standard and we essentially reverse-engineered the protocol by iterating and guessing based on the behavior. We are very excited about the Open Responses spec: clean primitives, better tooling, consistency for the win! Original tweet: https://x.com/vllm_project/status/2012015593650536904

Today we’re announcing Open Responses: an open-source spec for building multi-provider, interoperable LLM interfaces built on top of the original OpenAI Responses API. ✅ Multi-provider by default ✅ Useful for real-world workflows ✅ Extensible without fragmentation Build

Really interesting stuff happening on that other social network thanks to the open protocols and hackable algorithms. E.g there's Personalized Academic Recommendations and a whole paper about it. https://arxiv.org/abs/2601.04253

We ended up turning this into a product BTW :) https://solve.it.com/

Most coding happens in a dark IDE, but most thinking happens in a notebook. In this excerpt from a popular episode of Gradient Dissent, @jeremyphoward, co-founder of @fastdotai, explains how he is bridging that gap by using Jupyter Notebooks to build an integrated system for

RT Charles 🎉 Frye There was a flippening in the last few months: you can run your own LLM inference with rates and performance that match or beat LLM inference APIs. We wrote up the techniques to do so in a new guide, along with code samples. https://modal.com/docs/guide/high-performance-llm-inference Original tweet: https://x.com/charles_irl/status/2011484220032762114

RT @levelsio My #1 feature request for Claude Code should add is stop asking me every time for confirmation by default, like "can I check this folder", yes brother you can do anything you want Like maybe for writing ask me permission Add some [ just go ] mode Even with [ accept edits on ] it still asks me permission 1000 times per day I just want you to run and keep going mostly And no I don't feel like running it with --dangerously-skip-permissions Original tweet: https://x.com/levelsio/status/2011129631001170244

RT Greg Kamradt In ARC Prize 2024, MindsAI (@MindsAI_Jack et al) used test time fine tuning to get to the top of the leaderboard during the competition 100 models trained for 100 tasks Hearing how it worked was a peek into the future of continual learning Original tweet: https://x.com/GregKamradt/status/2010892517420699891

LLM memory is considered one of the hardest problems in AI. All we have today are endless hacks and workarounds. But the root solution has always been right in front of us. Next-token prediction is already an effective compressor. We don’t need a radical new architecture. The

RT Jane Manchun Wong I cry a little whenever I realize another novel software doesn’t come with the UNIX-style manual page, before I look at the sky and scream at the clouds 🥲 Original tweet: https://x.com/wongmjane/status/2010871340736397317

RT Daniel Litt IMO it should be considered quite rude in most contexts to post or send someone a wall of 100% AI-generated text. “Here, read this thing I didn’t care enough about to express myself.” Original tweet: https://x.com/littmath/status/2010759165061579086

Anyone using Wezterm? I've been having quite a few issues with Ghostty recently (particularly with trying to paste more than a few lines of text in—it often truncates) so wondering about trying something else. I've used alacritty before, and liked it. https://wezterm.org/

RT John Robinson llmsdottxt An open source Chrome extension that detects llms.txt files on websites as you browse around and makes it easy to discover and copy the URLs or content for use with your favorite LLM. @jeremyphoward proposed llms.txt as a way for websites to provide LLM friendly content that can be more to the point and more "context efficient" for LLMs to consume. Great for API docs and more. https://github.com/johnrobinsn/llmsdottxt_chrome Original tweet: https://x.com/johnrobinsn/status/2009256273242673612
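A minimal sketch of the detection idea described above, assuming only that a site publishes its file at the root path `/llms.txt`. The function names `llms_txt_url` and `has_llms_txt` are hypothetical and are not taken from the extension's source; the extension itself may work differently.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def llms_txt_url(page_url: str) -> str:
    """Map any page URL to the root-level /llms.txt URL of the same site."""
    parts = urlsplit(page_url)
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"not an absolute http(s) URL: {page_url!r}")
    # Keep scheme and host, replace the path, drop query string and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/llms.txt", "", ""))


def has_llms_txt(page_url: str, timeout: float = 5.0) -> bool:
    """Return True if the site answers 200 for /llms.txt (makes a network call)."""
    try:
        with urlopen(llms_txt_url(page_url), timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError and HTTPError (e.g. a 404) are both subclasses of OSError.
        return False
```

`llms_txt_url` is pure string handling, so it can be checked offline; `has_llms_txt` is the only part that touches the network.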

RT Dave Guarino The new http://Maryland.gov has an llms.txt! (Hey @jeremyphoward!) Original tweet: https://x.com/allafarce/status/2009011874273350136

How useful is llms.txt? It's so useful that Tailwind rejected a PR to add an llms.txt, on the basis that it would be so useful that people wouldn't need to read their docs any more! https://github.com/tailwindlabs/tailwindcss.com/pull/2388

Great to see @threejs supporting llms.txt. 😀 I have noticed that the majority of libs/services I work with seem to have an llms.txt nowadays. Makes me happy! (I've been really enjoying the very comprehensive @GeminiApp llms.txt recently for writing Gemini code.)

RT Jason Rosenfeld I was able to find some old examples from DeepLyrics, the fine-tuned version of ULMFiT we made back in 2018 to generate song lyrics for a grad school project. A primitive LLM, if you will. @jeremyphoward pre-trained the AWD-LSTM (~250M params?) on Wikitext-103 for its "base knowledge" and world understanding. Then, we gathered a large corpus of song lyrics, cleaned everything up and started to fine-tune the model to learn how to write the songs, including titles and genres. The model learned to add its own "[INTRO]/[BRIDGE]" and "(Album Version)" tags to the text, which--at the time--was pretty mind-blowing. We settled on a cool implementation of beam-search for our "inference-time scaling" as well. In this way, we explored a graph of outputs by continuing to extend the most promising ones and selecting the very best one at the end. It learned to interpolate between genres and you could force it to write, for example, an "Oldies Metal" song. I remember being blown away by the song "Sparkling Damnation: Feel the sparkle in your eyes, Savage in tragedy" ✨💀 The model learned stanza structure, rhyme scheme, and far more than I would have thought, completely on its own. Seeing this behavior from the model is what made it clear that scaling was going to work. If you continued to scale, training on the correct data, more and more complex emergent features and sophisticated behaviors (including things like deception, self-modeling, etc.) would percolate in the network. At the time, we had also experimented with making the model multi-modal by adding in audio waves as well, but, as we learned and many else are learning, native multimodality is hard. Original tweet: https://x.com/jrosenfeld13/status/2008561850502291736
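The beam-search step described above (keep extending the most promising partial outputs, then pick the best one at the end) can be sketched roughly as follows. This is a generic illustration, not the DeepLyrics implementation: `beam_search`, `TOY_MODEL`, and `toy_logprobs` are hypothetical names, and the tiny probability table stands in for a real language model.

```python
import math
from typing import Callable, Dict, List, Tuple

Beam = Tuple[float, List[str]]  # (cumulative log-probability, token sequence)


def beam_search(next_logprobs: Callable[[List[str]], Dict[str, float]],
                start: str, beam_width: int, max_len: int,
                eos: str = "<eos>") -> List[str]:
    """Extend the `beam_width` best partial sequences, return the best at the end."""
    beams: List[Beam] = [(0.0, [start])]
    for _ in range(max_len):
        candidates: List[Beam] = []
        for score, seq in beams:
            if seq[-1] == eos:
                candidates.append((score, seq))  # finished sequences carry over
                continue
            for tok, lp in next_logprobs(seq).items():
                candidates.append((score + lp, seq + [tok]))
        # Keep only the most promising candidates.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = candidates[:beam_width]
        if all(seq[-1] == eos for _, seq in beams):
            break
    return max(beams, key=lambda c: c[0])[1]


# Toy stand-in for a model: next-token probabilities given the last token.
TOY_MODEL = {
    "<s>": {"feel": 0.6, "savage": 0.4},
    "feel": {"the": 0.55, "a": 0.45},
    "savage": {"tragedy": 1.0},
    "the": {"<eos>": 1.0},
    "a": {"<eos>": 1.0},
    "tragedy": {"<eos>": 1.0},
}


def toy_logprobs(seq: List[str]) -> Dict[str, float]:
    return {tok: math.log(p) for tok, p in TOY_MODEL[seq[-1]].items()}


greedy = beam_search(toy_logprobs, "<s>", beam_width=1, max_len=5)
wide = beam_search(toy_logprobs, "<s>", beam_width=2, max_len=5)
```

In this toy table, a beam width of 1 (greedy decoding) commits to "feel" and ends with total probability 0.33, while a width of 2 keeps "savage" alive long enough to find the 0.4 path, which is the point of exploring more than one continuation.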

@jeremyphoward 2018 Fine-tuning ULMFiT on song lyrics (DeepLyrics, grad school project) Watching it learn to rhyme was when I knew scaling was going to work

RT Xeophon And I was right!! IQuest-Coder was set up incorrectly and includes the whole git history, including future commits. The model has found this trick and uses it rather often. Thus, its SWE-bench score should be discarded. Original tweet: https://x.com/xeophon/status/2006969664346501589

Your timeline will be full of this image. If you believe this is a real model, I have a bridge to sell to you. For starters, they don’t disclose how they run those evals, which is a huge red flag. But good luck to the poor soul who’ll get nerdsniped by this.

Hyperparams and architectures are *far* more stable across model and data sizes than most people think. For stuff that does need to change, we have pretty reliable rules of thumb for nearly all of them. (eg: OpenAI did nearly all their GPT4 ablations on ~1000x smaller models.)

In the last 6 months the NanoGPT Speedrun to 3.28 loss on FineWeb dropped by 33% to 2mins. Recently a subset of these changes were bulk copy-pasted to the larger-scale 2.92 loss track. Surprisingly, the untuned yolo run broke the 2.92 loss record by 25%. https://github.com/KellerJordan/modded-nanogpt/pull/188

RT Ziming Liu New year's read 📔 -- "Physics of AI Requires Mindset Shifts." I argue that "Physics of AI" research is hard due to the current publishing culture. But there is a simple solution -- curiosity-driven open research. https://kindxiaoming.github.io/blog/2025/physics-of-ai/ Original tweet: https://x.com/ZimingLiu11/status/2006810684546494522

RT George Grigorev residuals in transformers are great for stability and scaling; deeper layers update the signal along the residual stream. few people questioned this choice publicly, and since 2025 there's been progress. few thoughts about hyper connections (wrt the newly released DeepSeek paper – mHC) - introduction. residuals – x_{𝑙+1} = x_{𝑙} + F(x_{𝑙}, W_{𝑙}) hyper connections – x_{𝑙+1} = H_res_{𝑙} @ x_{𝑙} + H_post_{𝑙}^T @ F(H_pre_{𝑙} @ x_{𝑙}, W_{𝑙}), where W_{𝑙} has shape [c, n * c] – expands features after attention H_res_{𝑙} has shape [n, n] – mixes up the residual stream H_pre_{𝑙} has shape [1, n] – aggregates features from n * c back to c H_post_{𝑙} has shape [1, n] – maps the layer back into the stream. so this is basically residuals when your function F (self-attention + MLP) increases the number of channels, and you add some mappings. whale later adds an extra layer of math for manifold regularization so that hyper connections, when stacked up together, preserve the identity-mapping property, as is naturally done in simple channel-preserving residual connections. They perform large-scale training, wrote mixed-precision fused kernels in tilelang and proved that this is a viable approach. - my thoughts. 1) this naturally flashes back to the original resnet design, where you had to add 1d conv to shrink / expand channel size while doing natural convnet design (you normally increase channels and decrease spatial dim after conv, so you have to design residuals with channel mapping) 2) this also complements the value residuals idea, that re-using older values, when multiplied by learned residual constants in a self-regularizing fashion, noticeably improves scores while adding negligible impact on computation. Even derivation of input-dependent coefficients is similar. This idea seems like a generalization. 3) expand factor in MLP now seems to be slightly redundant, since we are doing similar work in the residual stream? or at least it ...
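To make the two update rules quoted above concrete, here is a small pure-Python sketch. It assumes the residual stream `x` is stored as `n` rows of `c` channels, so that the shapes `[n, n]`, `[1, n]`, and `[1, n]` given for `H_res`, `H_pre`, and `H_post` line up, and it treats the layer `F` as an arbitrary function from `[1, c]` to `[1, c]`. The helper names are hypothetical, and the manifold constraint that mHC adds is not modelled.

```python
from typing import Callable, List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def residual_step(x: Matrix, f: Callable[[Matrix], Matrix]) -> Matrix:
    """Plain residual: x_{l+1} = x_l + F(x_l)."""
    return add(x, f(x))


def hyper_connection_step(x: Matrix, f: Callable[[Matrix], Matrix],
                          h_res: Matrix, h_pre: Matrix, h_post: Matrix) -> Matrix:
    """x_{l+1} = H_res @ x_l + H_post^T @ F(H_pre @ x_l), with x_l of shape [n, c]."""
    mixed = matmul(h_res, x)                          # [n, n] @ [n, c] -> [n, c]
    layer_in = matmul(h_pre, x)                       # [1, n] @ [n, c] -> [1, c]
    layer_out = f(layer_in)                           # [1, c] -> [1, c]
    written = matmul(transpose(h_post), layer_out)    # [n, 1] @ [1, c] -> [n, c]
    return add(mixed, written)


def double(m: Matrix) -> Matrix:
    """Stand-in for the attention + MLP block."""
    return [[2.0 * v for v in row] for row in m]


# With a single stream (n = 1) and all mappings equal to 1, the rule is a plain residual.
x1 = [[1.0, 2.0]]
same = hyper_connection_step(x1, double, [[1.0]], [[1.0]], [[1.0]])

# Two streams: read the average of both, write the layer output back into stream 0 only.
x2 = [[1.0, 2.0], [3.0, 4.0]]
out2 = hyper_connection_step(x2, double,
                             h_res=[[1.0, 0.0], [0.0, 1.0]],
                             h_pre=[[0.5, 0.5]],
                             h_post=[[1.0, 0.0]])
```

The `n = 1` case shows why hyper connections can be read as a generalization of residuals: the three mappings only start to matter once the stream is widened to several copies.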

RT Pol Avec Why you should write the code to use your Thermostat Yesterday, I tried following @karpathy 's lead and use CC to interface with my thermostat. The experience wasn't great for me. Today I tried the opposite, I built it step-by-step almost completely manually using @answerdotai 's SolveIT. It was great. I spent a much longer time, say maybe 4-5h. But it was worth it, even though I have a perfectly fine app already to handle the temperature (in other words, I might not reuse the code again). So, was worth it? Yes, why? Because there were a myriad small decisions and lessons learned throughout the process. Those are usually small enough and that don't feel significant but they do compound in the end. They make your tools much sharper. If you look at the final package I published to pypi you won't see that, and it looks like code an LLM could one-shot, the LLM could definitely NOT make you learn during the process. This is more or less how it went: Well, I started getting SolveIT to read the docs for me and list which endpoints does the Thermostat API have. Unfortunately, their docs require JS to render 😅. I took this chance to have a look a Zyte service to give SolveIT a tool to read the docs. With that setup I got a list of all endpoints for the API. I followed the instructions to get the API keys & tokens. Gave it a try, it worked, I went away, and when I came back the token had expired. No problem, solveIT created a super short _refresh method as part of the class. Next step, create the basic "Home Status" info endpoint. But call _refresh first to make sure we have an updated token... I thought we would have to do this for all endpoints, so let's actually create a `_request` that does this for us already. That way the methods simply look like this: At this point I took the chance to practice using dialoghelper. I had created two endpoints following a clear format: first markdown header, @ patch method into the class, try it out and dis...
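The `_refresh` / `_request` pattern described above might look roughly like the sketch below. This is not the code from the post or the published package: the class name, the `/homestatus` path, and the injected `fetch_token` and `send` callables are all hypothetical stand-ins, chosen so the example runs without a real thermostat API.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class ThermostatClient:
    """Hypothetical client: every endpoint goes through _request, which refreshes first."""

    def __init__(self,
                 fetch_token: Callable[[], Tuple[str, float]],
                 send: Callable[[str, str, Dict[str, Any]], Any],
                 clock: Callable[[], float] = time.time) -> None:
        self._fetch_token = fetch_token   # returns (token, lifetime in seconds)
        self._send = send                 # performs the call: (path, token, params)
        self._clock = clock
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def _refresh(self) -> None:
        """Fetch a new token only if there is none yet or the current one has expired."""
        if self._token is None or self._clock() >= self._expires_at:
            token, lifetime = self._fetch_token()
            self._token = token
            self._expires_at = self._clock() + lifetime

    def _request(self, path: str, **params: Any) -> Any:
        """Single choke point: refresh the token, then send the request."""
        self._refresh()
        return self._send(path, self._token, params)

    def home_status(self) -> Any:
        # Endpoint methods stay one-liners because _request handles the token.
        return self._request("/homestatus")


# Usage with fakes: a manual clock and a counter instead of real network calls.
now = [0.0]
issued = []


def fake_fetch() -> Tuple[str, float]:
    issued.append(f"tok{len(issued) + 1}")
    return issued[-1], 10.0


client = ThermostatClient(fake_fetch, lambda path, tok, params: (path, tok),
                          clock=lambda: now[0])
first = client.home_status()
second = client.home_status()   # token still valid, no new fetch
now[0] = 11.0                   # simulate coming back after the token expired
third = client.home_status()
```

Routing every endpoint through one `_request` method means the expiry check lives in a single place, which is the design decision the post describes arriving at.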

I tried this too by asking CC to connect to my netatmo thermostat. CC spent a huge amount of tokens scanning ports, using nmap, arp, dns, web searching for my thermostat's brand MAC prefix, etc... It did find my thermostat MAC address, which looked cool. Later, it walked me

RT Alexia Jolicoeur-Martineau Many grifters claim that you don't gain anything by working without an LLM. They say it's comparable to not using a calculator. Yet that's how TRM was so successful and won the ARC prize. It does matter. One high quality output beats multiple low quality outputs. Original tweet: https://x.com/jm_alexia/status/2005269635265110463

"Skill issue" is having to use AI to do programming tasks that you would have been able to do before. You don't need to write more code, you need to focus on the minimum code you need to solve your specific problem.

Mass unsolicited email is good, apparently. (I got this email too FWIW. It really was very annoying.)

I think Simon is wrong here, as is Rob Pike. If you get an unsolicited email, it is your responsibility as a professional laptop user to know how to deal with that. The year is 2025. Unsolicited email has existed for half a century. If your time is wasted by receiving one

RT Muyu He We previously found qwen3 surprisingly selects **only three** MLP down projection vectors to create attention sinks, and now we find that this selection has an important feature: The model intelligently assigns a very large intermediate activation for token 0, which goes into down projection to create an unusually massive MLP output. We find that this strategy guarantees that the total output of the current layer (L=6) embeds an important cue for attention sinks, which the next layer (L=7) surfaces and creates the sink for the first time in the model. Observations: - The intermediate activation z of token 0 is 2-3 orders of magnitude larger than that of token 1 and 8. - 99% of the large activation is explained by only three dimensions for token 0, which means that it chooses the three special column vectors each with a huge scalar. - Since the three vectors are aligned in one dimension, this linear combination essentially creates a reinforcement on that direction, which produces an MLP output 4 orders of magnitude larger than that of other tokens. Effects: - The output of layer 6 is the addition of the residual input, the attention output and the MLP output. - As we can see, only in token 0 does the MLP output have a 3 orders of magnitude larger norm than the attention and residual components. This means for token 0, the MLP output will dominate the direction and norm of the total output. - We also see that the directional variance, or "spread" of all sampled MLP outputs is two orders of magnitude lower than that of the other tokens. - The extremely low variance means that once we apply layer norm next layer, only token 0's input to the attention layer will surface a consistent single direction that maps deterministically to a single key vector direction. We have previously shown this direction to be the exact "cue" that the query in layer 7 picks up to form attention sinks. So the intermediate activation achieves two birds with one ston...

RT Hunter📈🌈📊 Americans think about 43% of the world is wealthier than them. Wildly wrong. Americans who make the median full-time wage ($63,128) are in the top 3% of global incomes, adjusted for price differences. Even if they make the 10th percentile wage ($18,890) they’re top 24% globally. Original tweet: https://x.com/StatisticUrban/status/2004723924781892033

What's going on here? It sure looks like an AI image, w an unsolvable maze, artifacts no human would normally draw, inhuman tiling/plate patterns… Is the claim this was created to look like AI? Or there's a drawing program that does this without AI? Or did Apple get fooled?

So this is something I've been noticing more lately: people seeing an image, wrongly assuming it's AI, and then confidently raging about it being AI when it wasn't. Example: the replies to this Pluribus-themed image Tim Cook posted.

RT Peter Steinberger TIL adding your terminal here will greatly speed up compile times. https://nnethercote.github.io/2025/09/04/faster-rust-builds-on-mac.html Original tweet: https://x.com/steipete/status/2003925293665337501

http://fast.ai alums and ULMFiT users like Jason have been fine-tuning LLMs since 2017/18. The rest of y'all are years behind, sorry. 🤷

@jeremyphoward 2018 Fine-tuning ULMFiT on song lyrics (DeepLyrics, grad school project) Watching it learn to rhyme was when I knew scaling was going to work

RT Andy Masley 15.65k messages will add to about the same emissions as a one time 10 mile drive in a sedan. Original tweet: https://x.com/AndyMasley/status/2003539421879239095

I feel like I need to make a donation to an environmental impact fund 😬

RT Chris Albon I bike everywhere in SF. I barely ever take a taxi/uber/waymo. But if you want to ban Waymo it means you don’t care about cyclists like me. Original tweet: https://x.com/chrisalbon/status/2003501793393967423

RT Lewis Tunstall ULMFiT was really ahead of its time, complete with the pre-train -> mid-train -> SFT pipeline we use today Original tweet: https://x.com/_lewtun/status/2003404158595158191

2017 Pre-training (ULMFiT) 😊

The LLM training eras: 202x Pre-training (foundation) 2022 RLHF + PPO 2023 LoRA SFT 2024 Mid-Training 2025 RLVR + GRPO

RT Arnaud Bertrand This is largely being ignored but it's easily one of the biggest China news of the year. What China is doing with Hainan - a huge island (50 times the size of Singapore!) - is pretty extraordinary: they're basically making it into a completely different jurisdiction from the rest of the country, and an extremely attractive entry gate for the Chinese market. You can now import most products in the world (74% of all goods) entirely duty free into Hainan. And, if you transform the product and add 30% value locally, you can then send it to the rest of mainland China completely tariff-free. So for instance: import Australian beef into Hainan tax free. Slice it and package it for hotpot in Hainan: it can enter all mainland supermarkets duty-free. They also have insanely low corporate tax rates: 15%, lower than Hong Kong (16.5%) and Singapore (17%) or the rest of the mainland (25%). That's not all, Hainan now has different rules from the rest of China in dozens of areas: HEALTH: Basically the rule is that if a medicine or medical device is approved by regulatory agencies anywhere in the world, it can be used in Hainan - even if banned on the mainland. Which undoubtedly makes it THE place in the world with the widest range of medical treatments available. NO FIREWALL: Companies registered in Hainan can apply for unrestricted global internet access OPEN EDUCATION: Foreign universities can open campuses without a Chinese partner VISA-FREE: 86 countries get visa-free entry, probably one of the most open places in the world CAPITAL: Special accounts let money flow freely to and from overseas - normal mainland forex restrictions don't apply So they're running a pretty extraordinary "radical openness" experiment there. 
They're basically building a "greatest hits" of global free zones: Singapore's tax regime, Switzerland's medical access, Dubai's visa policy - all in one giant tropical island attached to the 1.4 billion people Chinese consumer mark...

China on Thursday launched island-wide special customs operations in the Hainan Free Trade Port (FTP), the world's largest FTP by area, allowing freer entry of overseas goods, expanded zero-tariff coverage and more business-friendly measures. http://xhtxs.cn/8VU

RT Luca Soldaini 🎀 can someone help folks at Mistral find more weak baselines to add here? since they can't stomach comparing with SoTA.... (in case y'all wanna fix it: Chandra, dots.ocr, olmOCR, MinerU, Monkey OCR, and PaddleOCR are a good start) Original tweet: https://x.com/soldni/status/2001821298109120856

RT Andy Masley Two big corrections which imo leave the reader with a much better understanding of where water’s being used, especially the introductory paragraph on water which now goes into detail on energy generation too. Really awesome. Grateful to Hao for her engagement here Original tweet: https://x.com/AndyMasley/status/2001520364635976075

We have confirmed the unit error in the government document, and I have issued a correction to my publisher. I have also made other updates based on the ongoing feedback. I detail these changes here: http://karendhao.com/20251217/empire-water-changes. Thank you to my readers for strengthening my book 🙏

RT Andy Masley Once again, a story about data centers and water where the author very directly announces they are using a wildly deceptive framing for no reason. Original tweet: https://x.com/AndyMasley/status/2001317098295898395

Interesting (and surprising to me) discovery from one of our Solveit students: it turns out that frontier LLMs (or @AnthropicAI Opus 4.5 at least) can't create nets for platonic solids, when given a simple API. E.g here's its attempt at a tetrahedron:

RT Conor Rogers Need a word for the phenomenon where people think things used to be nicer because the nice things are the only things from past eras that got preserved or photographed. Original tweet: https://x.com/conorjrogers/status/2000306256217825494

RT hardmaru “Why AGI Will Not Happen” @Tim_Dettmers https://timdettmers.com/2025/12/10/why-agi-will-not-happen/ This essay is worth reading. Discusses diminishing returns (and risks) of scaling. The contrast between West and East: “Winner Takes All” approach of building the biggest thing vs a long-term focus on practicality. “The purpose of this blog post is to address what I see as very sloppy thinking, thinking that is created in an echo chamber, particularly in the Bay Area, where the same ideas amplify themselves without critical awareness. This amplification of bad ideas and thinking exuded by the rationalist and EA movements, is a big problem in shaping a beneficial future for everyone.” “A key problem with ideas, particularly those coming from the Bay Area, is that they often live entirely in the idea space. Most people who think about AGI, superintelligence, scaling laws, and hardware improvements treat these concepts as abstract ideas that can be discussed like philosophical thought experiments. In fact, a lot of the thinking about superintelligence and AGI comes from Oxford-style philosophy. Oxford, the birthplace of effective altruism, mixed with the rationality culture from the Bay Area, gave rise to a strong distortion of how to clearly think about certain ideas.” Original tweet: https://x.com/hardmaru/status/2000038674835128718

Why isn't Tukey more well-known? He's the godfather of data science. Coined the terms "bit", "software", and "exploratory data analysis". Made FFTs usable. And much more… https://en.wikipedia.org/wiki/John_Tukey

RT Simon Willison Tip for the Google Gemini team: if you want to help Google truly get ahead in the AI era, use your hefty influence to get it so setting up API access to your own calendar doesn't involve THESE steps Original tweet: https://x.com/simonw/status/1999670989077250159

Need a Google Calendar CLI that works well with agents? Here you go: https://github.com/badlogic/gccli
