Georgi Gerganov

About

24th at the Electrica puzzle challenge | https://t.co/baTQS2bdia

Content History

RT LM Studio Introducing LM Link ✨ Connect to remote instances of LM Studio, securely. 🔐 End-to-end encrypted 📡 Load models locally, use them on the go 🖥️ Use local devices, LLM rigs, or cloud VMs Launching in partnership with @Tailscale Try it now: https://link.lmstudio.ai Original tweet: https://x.com/lmstudio/status/2026722042347663779

RT Z.ai GLM-4.7-Flash-GGUF is now the most downloaded model on @UnslothAI. Original tweet: https://x.com/Zai_org/status/2021207517557051627

Activity on ggerganov/hnterm

ggerganov commented on an issue in hnterm

ggerganov commented on an issue in hnterm

Activity on ggerganov/hnterm

ggerganov commented on an issue in hnterm

ggerganov commented on an issue in hnterm

RT Xuan-Son Nguyen Qwen3-Coder-Next and Minimax-M2.1 are available on HF inference endpoints with the price of $2.5/hr and $5/hr respectively. With the context fitting supported, you can now utilize the largest context length possible for a given hardware. No more manual tuning -c option! Original tweet: https://x.com/ngxson/status/2020896739222282736

Activity on ggerganov/tmp2

ggerganov opened a pull request in tmp2

ggerganov opened a pull request in tmp2

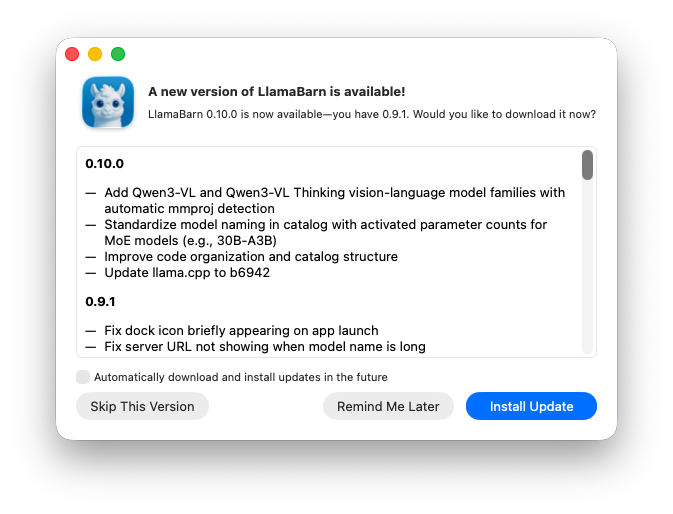

Introducing LlamaBarn: a tiny macOS menu bar app for running local LLMs. Open source, built on llama.cpp

RT Julien Chaumond 5️⃣ Original tweet: https://x.com/julien_c/status/2015804900525932663

RT Xuan-Son Nguyen Hugging Face Inference Endpoint now supports deploying GLM-4.7-Flash via llama.cpp, for as cheap as $0.8/hr. Using Q4_K_M and 24k tokens context length - should be enough for most use cases! Original tweet: https://x.com/ngxson/status/2015763148523897097

RT Xuan-Son Nguyen 🦙 llama.cpp has supported Anthropic's Messages API for a while now, with streaming, tool calling and reasoning support. Compatible with Claude Code. See more here: https://huggingface.co/blog/ggml-org/anthropic-messages-api-in-llamacpp Original tweet: https://x.com/ngxson/status/2013307175662223823
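
For readers who want to try the Messages API support mentioned above, here is a minimal sketch of what a request might look like. It only builds the request and does not send it. The `/v1/messages` path and the `model` / `max_tokens` / `messages` fields follow Anthropic's public Messages API; that a local llama.cpp server exposes the same path is an assumption here (the linked blog post is the authoritative source). The base URL, the `local-model` name, and the helper `build_messages_request` are hypothetical.

```python
import json
import urllib.request


def build_messages_request(base_url, prompt, model="local-model", max_tokens=256):
    """Build (but do not send) a Messages-style POST request.

    Assumptions: the server listens at base_url and serves /v1/messages;
    "local-model" is a placeholder model name.
    """
    payload = {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/messages",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical local address; adjust to wherever the server is running.
req = build_messages_request("http://127.0.0.1:8080", "Hello")
```

Passing `req` to `urllib.request.urlopen` would send it once a server is actually running at that address.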

Excited about this!

LFM2.5-Audio-1.5B > Real-time text-to-speech and ASR > Running locally on a CPU with llama.cpp > Interleave speech and text It's super elegant, I'm bullish on local audio models

Recent contributions by NVIDIA engineers and llama.cpp collaborators resulting in significant performance gains for local AI

Some neat QoL improvements coming to llama.cpp thanks to Johannes Gäßler https://github.com/ggml-org/llama.cpp/discussions/18049

RT Xuan-Son Nguyen Introducing: the new llama-cli 🦙🦙 > Clean looking interface > Multimodal support > Conversation control via commands > Speculative decoding support > Jinja fully supported Original tweet: https://x.com/ngxson/status/1998763208098853332

We joined forces with NVIDIA to unlock high-speed AI inference on RTX AI PCs and DGX Spark using llama.cpp. The latest Ministral-3B models reach 385+ tok/s on @NVIDIA_AI_PC GeForce RTX 5090 systems. Blog: https://developer.nvidia.com/blog/nvidia-accelerated-mistral-3-open-models-deliver-efficiency-accuracy-at-any-scale/

RT Lysandre Transformers v5's first release candidate is out 🔥 The biggest release of my life. It's been five years since the last major (v4). From 20 architectures to 400, 20k daily downloads to 3 million. The release is huge, w/ tokenization (no slow tokenizers!), modeling & processing. Original tweet: https://x.com/LysandreJik/status/1995558230567878975

RT Jeff Geerling Just tried out the new built-in WebUI feature of llama.cpp and it couldn't be easier. Just start llama-server with a host and port, and voila!
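
The "host and port" step in the post above can be sketched as a small helper that assembles the command line. This is an illustration under assumptions, not the poster's exact invocation: the post names only the host and port, so the model path (`model.gguf`), the address values, and the helper `llama_server_command` are hypothetical, while `-m`, `--host`, and `--port` are existing llama-server options.

```python
import shlex


def llama_server_command(model_path, host="127.0.0.1", port=8080):
    """Return the argv list for starting llama-server on host:port.

    model_path, host and port are illustrative values, not taken from the post.
    """
    return ["llama-server", "-m", model_path, "--host", host, "--port", str(port)]


cmd = llama_server_command("model.gguf")
command_line = shlex.join(cmd)  # shell-safe string form of the same command
web_ui_url = "http://127.0.0.1:8080"  # where the WebUI would be opened in a browser
```

The list in `cmd` can be passed to `subprocess.Popen` to launch the server, assuming `llama-server` is on the `PATH`.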

Initial M5 Neural Accelerators support in llama.cpp Enjoy faster TTFT in all ggml-based software (requires macOS Tahoe 26) https://github.com/ggml-org/llama.cpp/pull/16634

RT Emanuil Rusev Re @fishright @ggerganov Just pushed a fix for this — this is what first launch is going to look like in the next version.

RT clem 🤗 When you run AI on your device, it is more efficient and less big brother and free! So it's very cool to see the new llama.cpp UI, a chatgpt-like app that fully runs on your laptop without needing wifi or sending any data external to any API. It supports:

- 150,000+ GGUF models
- Drop in PDFs, images, or text documents
- Branch and edit conversations anytime
- Parallel chats and image processing
- Math and code rendering
- Constrained generation with JSON schema supported

Well done @ggerganov and team!

RT yags llama.cpp developers and community came together in a really impressive way to implement Qwen3-VL models. Check out the PRs, it’s so cool to see the collaboration that went into getting this done. Standard formats like GGUF, combined with mainline llama.cpp support ensures the models you download will work anywhere you choose to run them. This protects you from getting unwittingly locked into niche providers’ custom implementations that won’t run outside their platforms.

Qwen: 🎉 Qwen3-VL is now available on llama.cpp! Run this powerful vision-language model directly on your personal devices, fully supported on CPU, CUDA, Metal, Vulkan, and other backends. We’ve also released GGUF weights for all variants, from 2B up to 235B. Download and enjoy! 🚀 🤗 Link: https://x.com/Alibaba_Qwen/status/1984634293004747252

RT Qwen 🎉 Qwen3-VL is now available on llama.cpp! Run this powerful vision-language model directly on your personal devices—fully supported on CPU, CUDA, Metal, Vulkan, and other backends. We’ve also released GGUF weights for all variants—from 2B up to 235B. Download and enjoy! 🚀 🤗 Hugging Face: https://huggingface.co/collections/Qwen/qwen3-vl 🤖 ModelScope: https://modelscope.cn/collections/Qwen3-VL-5c7a94c8cb144b 📌 PR: https://github.com/ggerganov/llama.cpp/pull/16780

RT Vaibhav (VB) Srivastav BOOM: We've just re-launched HuggingChat v2 💬 - 115 open source models in a single interface is stronger than ChatGPT 🔥 Introducing: HuggingChat Omni 💫 > Select the best model for every prompt automatically 🚀 > Automatic model selection for your queries > 115 models available across 15 providers including @GroqInc, @CerebrasSystems, @togethercompute, @novita_labs, and more Powered by HF Inference Providers — access hundreds of AI models using only world-class inference providers Omni uses a policy-based approach to model selection (after experimenting with different methods). Credits to @katanemo_ for their small routing model: katanemo/Arch-Router-1.5B Coming next: • MCP support with web search • File support • Omni routing selection improvements • Customizable policies Try it out today at hf[dot] co/chat 🤗

simple

David Finsterwalder | eu/acc: Important info. The issue in that benchmark seems to be ollama. Native llama.cpp works much better. Not sure how ollama can fail so hard to wrap llama.cpp. The lesson: Don’t use ollama. Especially not for benchmarks. Link: https://x.com/DFinsterwalder/status/1978372050239516989